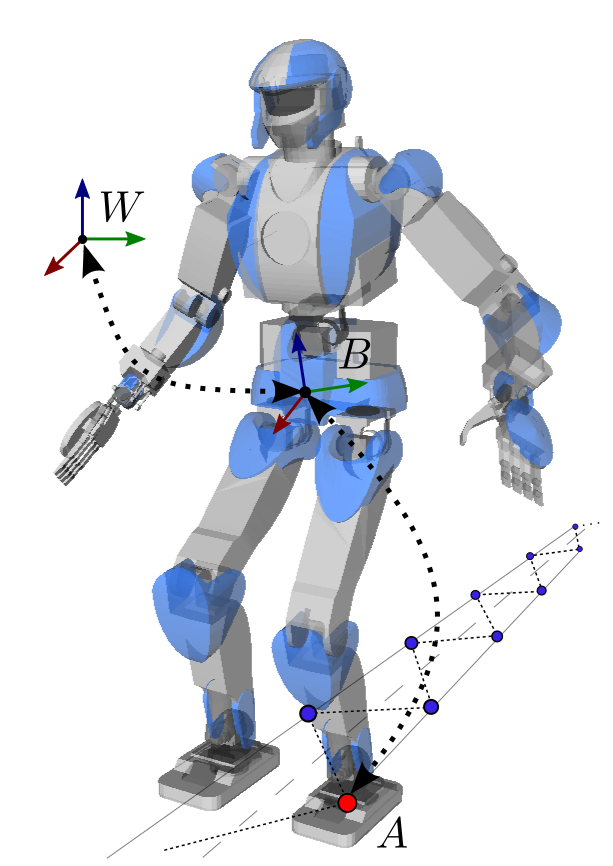

For a legged system, locomotion is about controlling the unactuated floating base to a desired location in the world. Before a control action can be applied, we first need to estimate the position and orientation of this floating base. In what follows, we will see the simple anchor point solution, which is a good way to get started and works well in practice. For example, it is the one used in this stair climbing experiment.

Orientation of the floating base

We assume that our legged robot is equipped with an inertial measurement unit (IMU) in its pelvis link, and attach the base frame $B$ of the floating base to this same link. The IMU's gyroscopes measure the angular velocity ${}^B\boldsymbol{\omega}_B$ of the base, expressed in $B$, while its accelerometers measure a linear acceleration ${}^B\boldsymbol{a}$, also expressed in $B$, that includes the contribution of gravity. From these measurements, we can estimate the orientation ${}^W\boldsymbol{R}_B$ of the floating base with respect to the world frame $W$.
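To make the frame notation concrete, here is a minimal NumPy sketch, assuming ${}^W\boldsymbol{R}_B$ is stored as a $3 \times 3$ array that maps coordinates expressed in $B$ to coordinates expressed in $W$. The helper names `rot_z`, `body_to_world` and `world_to_body`, as well as the 90° yaw and the gyroscope reading, are hypothetical values chosen only for illustration. The sketch shows that a gyroscope reading about the $x$-axis of $B$ becomes a rotation about the $y$-axis of $W$ when the base is yawed by 90°, and that the transpose maps it back.

```python
import numpy as np


def rot_z(theta: float) -> np.ndarray:
    """Rotation matrix for a rotation of angle theta (rad) about the z-axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0],
                     [s, c, 0.0],
                     [0.0, 0.0, 1.0]])


def body_to_world(R_WB: np.ndarray, v_B: np.ndarray) -> np.ndarray:
    """Map a vector expressed in the base frame B to the world frame W."""
    return R_WB @ v_B


def world_to_body(R_WB: np.ndarray, v_W: np.ndarray) -> np.ndarray:
    """Inverse mapping: rotation matrices are orthogonal, so R^{-1} = R^T."""
    return R_WB.T @ v_W


# Hypothetical example: the base is yawed by 90 degrees with respect to W
R_WB = rot_z(np.pi / 2)
omega_B = np.array([1.0, 0.0, 0.0])  # gyro reading: 1 rad/s about the x-axis of B
omega_W = body_to_world(R_WB, omega_B)  # approximately [0, 1, 0] in W
print(np.round(omega_W, 6))
```

Keeping the frame of each vector explicit in variable names (`omega_B`, `omega_W`, `R_WB`) mirrors the superscript/subscript notation above and helps catch frame mix-ups.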

Integration of angular velocities

Let us select our world frame $W$ as the floating base frame at the initial time $t_0$. Let $\boldsymbol{R}^i_k$ denote our estimate of the orientation of the floating base $B$ at time $t_k$, represented by the rotation matrix that maps vectors from $B$ to $W$, where the superscript $i$ stands for integration. With these notations, we have by definition:

$$
\boldsymbol{R}^i_0 = {}^W\boldsymbol{R}_B(t_0) = \boldsymbol{I}_3
$$

with $\boldsymbol{I}_3$ the $3 \times 3$ identity matrix. At time $t_k$, the gyroscopes measure the angular velocity ${}^B\boldsymbol{\omega}_B(t_k)$

\def\bfO{\boldsymbol{O}}

\def\bfP{\boldsymbol{P}}

\def\bfQ{\boldsymbol{Q}}

\def\bfR{\boldsymbol{R}}

\def\bfS{\boldsymbol{S}}

\def\bfT{\boldsymbol{T}}

\def\bfU{\boldsymbol{U}}

\def\bfV{\boldsymbol{V}}

\def\bfW{\boldsymbol{W}}

\def\bfX{\boldsymbol{X}}

\def\bfY{\boldsymbol{Y}}

\def\bfZ{\boldsymbol{Z}}

\def\bfalpha{\boldsymbol{\alpha}}

\def\bfa{\boldsymbol{a}}

\def\bfbeta{\boldsymbol{\beta}}

\def\bfb{\boldsymbol{b}}

\def\bfcd{\dot{\bfc}}

\def\bfchi{\boldsymbol{\chi}}

\def\bfc{\boldsymbol{c}}

\def\bfd{\boldsymbol{d}}

\def\bfe{\boldsymbol{e}}

\def\bff{\boldsymbol{f}}

\def\bfgamma{\boldsymbol{\gamma}}

\def\bfg{\boldsymbol{g}}

\def\bfh{\boldsymbol{h}}

\def\bfi{\boldsymbol{i}}

\def\bfj{\boldsymbol{j}}

\def\bfk{\boldsymbol{k}}

\def\bflambda{\boldsymbol{\lambda}}

\def\bfl{\boldsymbol{l}}

\def\bfm{\boldsymbol{m}}

\def\bfn{\boldsymbol{n}}

\def\bfomega{\boldsymbol{\omega}}

\def\bfone{\boldsymbol{1}}

\def\bfo{\boldsymbol{o}}

\def\bfpdd{\ddot{\bfp}}

\def\bfpd{\dot{\bfp}}

\def\bfphi{\boldsymbol{\phi}}

\def\bfp{\boldsymbol{p}}

\def\bfq{\boldsymbol{q}}

\def\bfr{\boldsymbol{r}}

\def\bfsigma{\boldsymbol{\sigma}}

\def\bfs{\boldsymbol{s}}

\def\bftau{\boldsymbol{\tau}}

\def\bftheta{\boldsymbol{\theta}}

\def\bft{\boldsymbol{t}}

\def\bfu{\boldsymbol{u}}

\def\bfv{\boldsymbol{v}}

\def\bfw{\boldsymbol{w}}

\def\bfxi{\boldsymbol{\xi}}

\def\bfx{\boldsymbol{x}}

\def\bfy{\boldsymbol{y}}

\def\bfzero{\boldsymbol{0}}

\def\bfz{\boldsymbol{z}}

\def\defeq{\stackrel{\mathrm{def}}{=}}

\def\p{\boldsymbol{p}}

\def\qdd{\ddot{\bfq}}

\def\qd{\dot{\bfq}}

\def\q{\boldsymbol{q}}

\def\xd{\dot{x}}

\def\yd{\dot{y}}

\def\zd{\dot{z}}

{}^B

\bfomega_B(t_k) B ω B ( t k ) B \def\bfA{\boldsymbol{A}}

\def\bfB{\boldsymbol{B}}

\def\bfC{\boldsymbol{C}}

\def\bfD{\boldsymbol{D}}

\def\bfE{\boldsymbol{E}}

\def\bfF{\boldsymbol{F}}

\def\bfG{\boldsymbol{G}}

\def\bfH{\boldsymbol{H}}

\def\bfI{\boldsymbol{I}}

\def\bfJ{\boldsymbol{J}}

\def\bfK{\boldsymbol{K}}

\def\bfL{\boldsymbol{L}}

\def\bfM{\boldsymbol{M}}

\def\bfN{\boldsymbol{N}}

\def\bfO{\boldsymbol{O}}

\def\bfP{\boldsymbol{P}}

\def\bfQ{\boldsymbol{Q}}

\def\bfR{\boldsymbol{R}}

\def\bfS{\boldsymbol{S}}

\def\bfT{\boldsymbol{T}}

\def\bfU{\boldsymbol{U}}

\def\bfV{\boldsymbol{V}}

\def\bfW{\boldsymbol{W}}

\def\bfX{\boldsymbol{X}}

\def\bfY{\boldsymbol{Y}}

\def\bfZ{\boldsymbol{Z}}

\def\bfalpha{\boldsymbol{\alpha}}

\def\bfa{\boldsymbol{a}}

\def\bfbeta{\boldsymbol{\beta}}

\def\bfb{\boldsymbol{b}}

\def\bfcd{\dot{\bfc}}

\def\bfchi{\boldsymbol{\chi}}

\def\bfc{\boldsymbol{c}}

\def\bfd{\boldsymbol{d}}

\def\bfe{\boldsymbol{e}}

\def\bff{\boldsymbol{f}}

\def\bfgamma{\boldsymbol{\gamma}}

\def\bfg{\boldsymbol{g}}

\def\bfh{\boldsymbol{h}}

\def\bfi{\boldsymbol{i}}

\def\bfj{\boldsymbol{j}}

\def\bfk{\boldsymbol{k}}

\def\bflambda{\boldsymbol{\lambda}}

\def\bfl{\boldsymbol{l}}

\def\bfm{\boldsymbol{m}}

\def\bfn{\boldsymbol{n}}

\def\bfomega{\boldsymbol{\omega}}

\def\bfone{\boldsymbol{1}}

\def\bfo{\boldsymbol{o}}

\def\bfpdd{\ddot{\bfp}}

\def\bfpd{\dot{\bfp}}

\def\bfphi{\boldsymbol{\phi}}

\def\bfp{\boldsymbol{p}}

\def\bfq{\boldsymbol{q}}

\def\bfr{\boldsymbol{r}}

\def\bfsigma{\boldsymbol{\sigma}}

\def\bfs{\boldsymbol{s}}

\def\bftau{\boldsymbol{\tau}}

\def\bftheta{\boldsymbol{\theta}}

\def\bft{\boldsymbol{t}}

\def\bfu{\boldsymbol{u}}

\def\bfv{\boldsymbol{v}}

\def\bfw{\boldsymbol{w}}

\def\bfxi{\boldsymbol{\xi}}

\def\bfx{\boldsymbol{x}}

\def\bfy{\boldsymbol{y}}

\def\bfzero{\boldsymbol{0}}

\def\bfz{\boldsymbol{z}}

\def\defeq{\stackrel{\mathrm{def}}{=}}

\def\p{\boldsymbol{p}}

\def\qdd{\ddot{\bfq}}

\def\qd{\dot{\bfq}}

\def\q{\boldsymbol{q}}

\def\xd{\dot{x}}

\def\yd{\dot{y}}

\def\zd{\dot{z}}

B B B \def\bfA{\boldsymbol{A}}

\def\bfB{\boldsymbol{B}}

\def\bfC{\boldsymbol{C}}

\def\bfD{\boldsymbol{D}}

\def\bfE{\boldsymbol{E}}

\def\bfF{\boldsymbol{F}}

\def\bfG{\boldsymbol{G}}

\def\bfH{\boldsymbol{H}}

\def\bfI{\boldsymbol{I}}

\def\bfJ{\boldsymbol{J}}

\def\bfK{\boldsymbol{K}}

\def\bfL{\boldsymbol{L}}

\def\bfM{\boldsymbol{M}}

\def\bfN{\boldsymbol{N}}

\def\bfO{\boldsymbol{O}}

\def\bfP{\boldsymbol{P}}

\def\bfQ{\boldsymbol{Q}}

\def\bfR{\boldsymbol{R}}

\def\bfS{\boldsymbol{S}}

\def\bfT{\boldsymbol{T}}

\def\bfU{\boldsymbol{U}}

\def\bfV{\boldsymbol{V}}

\def\bfW{\boldsymbol{W}}

\def\bfX{\boldsymbol{X}}

\def\bfY{\boldsymbol{Y}}

\def\bfZ{\boldsymbol{Z}}

\def\bfalpha{\boldsymbol{\alpha}}

\def\bfa{\boldsymbol{a}}

\def\bfbeta{\boldsymbol{\beta}}

\def\bfb{\boldsymbol{b}}

\def\bfcd{\dot{\bfc}}

\def\bfchi{\boldsymbol{\chi}}

\def\bfc{\boldsymbol{c}}

\def\bfd{\boldsymbol{d}}

\def\bfe{\boldsymbol{e}}

\def\bff{\boldsymbol{f}}

\def\bfgamma{\boldsymbol{\gamma}}

\def\bfg{\boldsymbol{g}}

\def\bfh{\boldsymbol{h}}

\def\bfi{\boldsymbol{i}}

\def\bfj{\boldsymbol{j}}

\def\bfk{\boldsymbol{k}}

\def\bflambda{\boldsymbol{\lambda}}

\def\bfl{\boldsymbol{l}}

\def\bfm{\boldsymbol{m}}

\def\bfn{\boldsymbol{n}}

\def\bfomega{\boldsymbol{\omega}}

\def\bfone{\boldsymbol{1}}

\def\bfo{\boldsymbol{o}}

\def\bfpdd{\ddot{\bfp}}

\def\bfpd{\dot{\bfp}}

\def\bfphi{\boldsymbol{\phi}}

\def\bfp{\boldsymbol{p}}

\def\bfq{\boldsymbol{q}}

\def\bfr{\boldsymbol{r}}

\def\bfsigma{\boldsymbol{\sigma}}

\def\bfs{\boldsymbol{s}}

\def\bftau{\boldsymbol{\tau}}

\def\bftheta{\boldsymbol{\theta}}

\def\bft{\boldsymbol{t}}

\def\bfu{\boldsymbol{u}}

\def\bfv{\boldsymbol{v}}

\def\bfw{\boldsymbol{w}}

\def\bfxi{\boldsymbol{\xi}}

\def\bfx{\boldsymbol{x}}

\def\bfy{\boldsymbol{y}}

\def\bfzero{\boldsymbol{0}}

\def\bfz{\boldsymbol{z}}

\def\defeq{\stackrel{\mathrm{def}}{=}}

\def\p{\boldsymbol{p}}

\def\qdd{\ddot{\bfq}}

\def\qd{\dot{\bfq}}

\def\q{\boldsymbol{q}}

\def\xd{\dot{x}}

\def\yd{\dot{y}}

\def\zd{\dot{z}}

B B ω k : = B ω B ( t k ) \def\bfA{\boldsymbol{A}}

\def\bfB{\boldsymbol{B}}

\def\bfC{\boldsymbol{C}}

\def\bfD{\boldsymbol{D}}

\def\bfE{\boldsymbol{E}}

\def\bfF{\boldsymbol{F}}

\def\bfG{\boldsymbol{G}}

\def\bfH{\boldsymbol{H}}

\def\bfI{\boldsymbol{I}}

\def\bfJ{\boldsymbol{J}}

\def\bfK{\boldsymbol{K}}

\def\bfL{\boldsymbol{L}}

\def\bfM{\boldsymbol{M}}

\def\bfN{\boldsymbol{N}}

\def\bfO{\boldsymbol{O}}

\def\bfP{\boldsymbol{P}}

\def\bfQ{\boldsymbol{Q}}

\def\bfR{\boldsymbol{R}}

\def\bfS{\boldsymbol{S}}

\def\bfT{\boldsymbol{T}}

\def\bfU{\boldsymbol{U}}

\def\bfV{\boldsymbol{V}}

\def\bfW{\boldsymbol{W}}

\def\bfX{\boldsymbol{X}}

\def\bfY{\boldsymbol{Y}}

\def\bfZ{\boldsymbol{Z}}

\def\bfalpha{\boldsymbol{\alpha}}

\def\bfa{\boldsymbol{a}}

\def\bfbeta{\boldsymbol{\beta}}

\def\bfb{\boldsymbol{b}}

\def\bfcd{\dot{\bfc}}

\def\bfchi{\boldsymbol{\chi}}

\def\bfc{\boldsymbol{c}}

\def\bfd{\boldsymbol{d}}

\def\bfe{\boldsymbol{e}}

\def\bff{\boldsymbol{f}}

\def\bfgamma{\boldsymbol{\gamma}}

\def\bfg{\boldsymbol{g}}

\def\bfh{\boldsymbol{h}}

\def\bfi{\boldsymbol{i}}

\def\bfj{\boldsymbol{j}}

\def\bfk{\boldsymbol{k}}

\def\bflambda{\boldsymbol{\lambda}}

\def\bfl{\boldsymbol{l}}

\def\bfm{\boldsymbol{m}}

\def\bfn{\boldsymbol{n}}

\def\bfomega{\boldsymbol{\omega}}

\def\bfone{\boldsymbol{1}}

\def\bfo{\boldsymbol{o}}

\def\bfpdd{\ddot{\bfp}}

\def\bfpd{\dot{\bfp}}

\def\bfphi{\boldsymbol{\phi}}

\def\bfp{\boldsymbol{p}}

\def\bfq{\boldsymbol{q}}

\def\bfr{\boldsymbol{r}}

\def\bfsigma{\boldsymbol{\sigma}}

\def\bfs{\boldsymbol{s}}

\def\bftau{\boldsymbol{\tau}}

\def\bftheta{\boldsymbol{\theta}}

\def\bft{\boldsymbol{t}}

\def\bfu{\boldsymbol{u}}

\def\bfv{\boldsymbol{v}}

\def\bfw{\boldsymbol{w}}

\def\bfxi{\boldsymbol{\xi}}

\def\bfx{\boldsymbol{x}}

\def\bfy{\boldsymbol{y}}

\def\bfzero{\boldsymbol{0}}

\def\bfz{\boldsymbol{z}}

\def\defeq{\stackrel{\mathrm{def}}{=}}

\def\p{\boldsymbol{p}}

\def\qdd{\ddot{\bfq}}

\def\qd{\dot{\bfq}}

\def\q{\boldsymbol{q}}

\def\xd{\dot{x}}

\def\yd{\dot{y}}

\def\zd{\dot{z}}

\bfomega_k := {}^B

\bfomega_B(t_k) ω k := B ω B ( t k )

R k + 1 i = R k i exp ( [ ω k × ] Δ t ) \def\bfA{\boldsymbol{A}}

\def\bfB{\boldsymbol{B}}

\def\bfC{\boldsymbol{C}}

\def\bfD{\boldsymbol{D}}

\def\bfE{\boldsymbol{E}}

\def\bfF{\boldsymbol{F}}

\def\bfG{\boldsymbol{G}}

\def\bfH{\boldsymbol{H}}

\def\bfI{\boldsymbol{I}}

\def\bfJ{\boldsymbol{J}}

\def\bfK{\boldsymbol{K}}

\def\bfL{\boldsymbol{L}}

\def\bfM{\boldsymbol{M}}

\def\bfN{\boldsymbol{N}}

\def\bfO{\boldsymbol{O}}

\def\bfP{\boldsymbol{P}}

\def\bfQ{\boldsymbol{Q}}

\def\bfR{\boldsymbol{R}}

\def\bfS{\boldsymbol{S}}

\def\bfT{\boldsymbol{T}}

\def\bfU{\boldsymbol{U}}

\def\bfV{\boldsymbol{V}}

\def\bfW{\boldsymbol{W}}

\def\bfX{\boldsymbol{X}}

\def\bfY{\boldsymbol{Y}}

\def\bfZ{\boldsymbol{Z}}

\def\bfalpha{\boldsymbol{\alpha}}

\def\bfa{\boldsymbol{a}}

\def\bfbeta{\boldsymbol{\beta}}

\def\bfb{\boldsymbol{b}}

\def\bfcd{\dot{\bfc}}

\def\bfchi{\boldsymbol{\chi}}

\def\bfc{\boldsymbol{c}}

\def\bfd{\boldsymbol{d}}

\def\bfe{\boldsymbol{e}}

\def\bff{\boldsymbol{f}}

\def\bfgamma{\boldsymbol{\gamma}}

\def\bfg{\boldsymbol{g}}

\def\bfh{\boldsymbol{h}}

\def\bfi{\boldsymbol{i}}

\def\bfj{\boldsymbol{j}}

\def\bfk{\boldsymbol{k}}

\def\bflambda{\boldsymbol{\lambda}}

\def\bfl{\boldsymbol{l}}

\def\bfm{\boldsymbol{m}}

\def\bfn{\boldsymbol{n}}

\def\bfomega{\boldsymbol{\omega}}

\def\bfone{\boldsymbol{1}}

\def\bfo{\boldsymbol{o}}

\def\bfpdd{\ddot{\bfp}}

\def\bfpd{\dot{\bfp}}

\def\bfphi{\boldsymbol{\phi}}

\def\bfp{\boldsymbol{p}}

\def\bfq{\boldsymbol{q}}

\def\bfr{\boldsymbol{r}}

\def\bfsigma{\boldsymbol{\sigma}}

\def\bfs{\boldsymbol{s}}

\def\bftau{\boldsymbol{\tau}}

\def\bftheta{\boldsymbol{\theta}}

\def\bft{\boldsymbol{t}}

\def\bfu{\boldsymbol{u}}

\def\bfv{\boldsymbol{v}}

\def\bfw{\boldsymbol{w}}

\def\bfxi{\boldsymbol{\xi}}

\def\bfx{\boldsymbol{x}}

\def\bfy{\boldsymbol{y}}

\def\bfzero{\boldsymbol{0}}

\def\bfz{\boldsymbol{z}}

\def\defeq{\stackrel{\mathrm{def}}{=}}

\def\p{\boldsymbol{p}}

\def\qdd{\ddot{\bfq}}

\def\qd{\dot{\bfq}}

\def\q{\boldsymbol{q}}

\def\xd{\dot{x}}

\def\yd{\dot{y}}

\def\zd{\dot{z}}

\bfR^i_{k+1} = \bfR^i_{k} \exp([\bfomega_k \times] \Delta t) R k + 1 i = R k i exp ([ ω k × ] Δ t ) with Δ t = t k − t k − 1 \def\bfA{\boldsymbol{A}}

\def\bfB{\boldsymbol{B}}

\def\bfC{\boldsymbol{C}}

\def\bfD{\boldsymbol{D}}

\def\bfE{\boldsymbol{E}}

\def\bfF{\boldsymbol{F}}

\def\bfG{\boldsymbol{G}}

\def\bfH{\boldsymbol{H}}

\def\bfI{\boldsymbol{I}}

\def\bfJ{\boldsymbol{J}}

\def\bfK{\boldsymbol{K}}

\def\bfL{\boldsymbol{L}}

\def\bfM{\boldsymbol{M}}

\def\bfN{\boldsymbol{N}}

\def\bfO{\boldsymbol{O}}

\def\bfP{\boldsymbol{P}}

\def\bfQ{\boldsymbol{Q}}

\def\bfR{\boldsymbol{R}}

\def\bfS{\boldsymbol{S}}

\def\bfT{\boldsymbol{T}}

\def\bfU{\boldsymbol{U}}

\def\bfV{\boldsymbol{V}}

\def\bfW{\boldsymbol{W}}

\def\bfX{\boldsymbol{X}}

\def\bfY{\boldsymbol{Y}}

\def\bfZ{\boldsymbol{Z}}

\def\bfalpha{\boldsymbol{\alpha}}

\def\bfa{\boldsymbol{a}}

\def\bfbeta{\boldsymbol{\beta}}

\def\bfb{\boldsymbol{b}}

\def\bfcd{\dot{\bfc}}

\def\bfchi{\boldsymbol{\chi}}

\def\bfc{\boldsymbol{c}}

\def\bfd{\boldsymbol{d}}

\def\bfe{\boldsymbol{e}}

\def\bff{\boldsymbol{f}}

\def\bfgamma{\boldsymbol{\gamma}}

\def\bfg{\boldsymbol{g}}

\def\bfh{\boldsymbol{h}}

\def\bfi{\boldsymbol{i}}

\def\bfj{\boldsymbol{j}}

\def\bfk{\boldsymbol{k}}

\def\bflambda{\boldsymbol{\lambda}}

\def\bfl{\boldsymbol{l}}

\def\bfm{\boldsymbol{m}}

\def\bfn{\boldsymbol{n}}

\def\bfomega{\boldsymbol{\omega}}

\def\bfone{\boldsymbol{1}}

\def\bfo{\boldsymbol{o}}

\def\bfpdd{\ddot{\bfp}}

\def\bfpd{\dot{\bfp}}

\def\bfphi{\boldsymbol{\phi}}

\def\bfp{\boldsymbol{p}}

\def\bfq{\boldsymbol{q}}

\def\bfr{\boldsymbol{r}}

\def\bfsigma{\boldsymbol{\sigma}}

\def\bfs{\boldsymbol{s}}

\def\bftau{\boldsymbol{\tau}}

\def\bftheta{\boldsymbol{\theta}}

\def\bft{\boldsymbol{t}}

\def\bfu{\boldsymbol{u}}

\def\bfv{\boldsymbol{v}}

\def\bfw{\boldsymbol{w}}

\def\bfxi{\boldsymbol{\xi}}

\def\bfx{\boldsymbol{x}}

\def\bfy{\boldsymbol{y}}

\def\bfzero{\boldsymbol{0}}

\def\bfz{\boldsymbol{z}}

\def\defeq{\stackrel{\mathrm{def}}{=}}

\def\p{\boldsymbol{p}}

\def\qdd{\ddot{\bfq}}

\def\qd{\dot{\bfq}}

\def\q{\boldsymbol{q}}

\def\xd{\dot{x}}

\def\yd{\dot{y}}

\def\zd{\dot{z}}

\Delta t = t_k - t_{k-1} Δ t = t k − t k − 1 [ ω k × ] \def\bfA{\boldsymbol{A}}

\def\bfB{\boldsymbol{B}}

\def\bfC{\boldsymbol{C}}

\def\bfD{\boldsymbol{D}}

\def\bfE{\boldsymbol{E}}

\def\bfF{\boldsymbol{F}}

\def\bfG{\boldsymbol{G}}

\def\bfH{\boldsymbol{H}}

\def\bfI{\boldsymbol{I}}

\def\bfJ{\boldsymbol{J}}

\def\bfK{\boldsymbol{K}}

\def\bfL{\boldsymbol{L}}

\def\bfM{\boldsymbol{M}}

\def\bfN{\boldsymbol{N}}

\def\bfO{\boldsymbol{O}}

\def\bfP{\boldsymbol{P}}

\def\bfQ{\boldsymbol{Q}}

\def\bfR{\boldsymbol{R}}

\def\bfS{\boldsymbol{S}}

\def\bfT{\boldsymbol{T}}

\def\bfU{\boldsymbol{U}}

\def\bfV{\boldsymbol{V}}

\def\bfW{\boldsymbol{W}}

\def\bfX{\boldsymbol{X}}

\def\bfY{\boldsymbol{Y}}

\def\bfZ{\boldsymbol{Z}}

\def\bfalpha{\boldsymbol{\alpha}}

\def\bfa{\boldsymbol{a}}

\def\bfbeta{\boldsymbol{\beta}}

\def\bfb{\boldsymbol{b}}

\def\bfcd{\dot{\bfc}}

\def\bfchi{\boldsymbol{\chi}}

\def\bfc{\boldsymbol{c}}

\def\bfd{\boldsymbol{d}}

\def\bfe{\boldsymbol{e}}

\def\bff{\boldsymbol{f}}

\def\bfgamma{\boldsymbol{\gamma}}

\def\bfg{\boldsymbol{g}}

\def\bfh{\boldsymbol{h}}

\def\bfi{\boldsymbol{i}}

\def\bfj{\boldsymbol{j}}

\def\bfk{\boldsymbol{k}}

\def\bflambda{\boldsymbol{\lambda}}

\def\bfl{\boldsymbol{l}}

\def\bfm{\boldsymbol{m}}

\def\bfn{\boldsymbol{n}}

\def\bfomega{\boldsymbol{\omega}}

\def\bfone{\boldsymbol{1}}

\def\bfo{\boldsymbol{o}}

\def\bfpdd{\ddot{\bfp}}

\def\bfpd{\dot{\bfp}}

\def\bfphi{\boldsymbol{\phi}}

\def\bfp{\boldsymbol{p}}

\def\bfq{\boldsymbol{q}}

\def\bfr{\boldsymbol{r}}

\def\bfsigma{\boldsymbol{\sigma}}

\def\bfs{\boldsymbol{s}}

\def\bftau{\boldsymbol{\tau}}

\def\bftheta{\boldsymbol{\theta}}

\def\bft{\boldsymbol{t}}

\def\bfu{\boldsymbol{u}}

\def\bfv{\boldsymbol{v}}

\def\bfw{\boldsymbol{w}}

\def\bfxi{\boldsymbol{\xi}}

\def\bfx{\boldsymbol{x}}

\def\bfy{\boldsymbol{y}}

\def\bfzero{\boldsymbol{0}}

\def\bfz{\boldsymbol{z}}

\def\defeq{\stackrel{\mathrm{def}}{=}}

\def\p{\boldsymbol{p}}

\def\qdd{\ddot{\bfq}}

\def\qd{\dot{\bfq}}

\def\q{\boldsymbol{q}}

\def\xd{\dot{x}}

\def\yd{\dot{y}}

\def\zd{\dot{z}}

[\bfomega_k \times] [ ω k × ] Rodrigues' rotation formula :

R k + 1 i = R k i ( I 3 + sin θ k θ k [ ω k × ] + 1 − cos θ k θ k 2 [ ω k × ] 2 ) \def\bfA{\boldsymbol{A}}

\def\bfB{\boldsymbol{B}}

\def\bfC{\boldsymbol{C}}

\def\bfD{\boldsymbol{D}}

\def\bfE{\boldsymbol{E}}

\def\bfF{\boldsymbol{F}}

\def\bfG{\boldsymbol{G}}

\def\bfH{\boldsymbol{H}}

\def\bfI{\boldsymbol{I}}

\def\bfJ{\boldsymbol{J}}

\def\bfK{\boldsymbol{K}}

\def\bfL{\boldsymbol{L}}

\def\bfM{\boldsymbol{M}}

\def\bfN{\boldsymbol{N}}

\def\bfO{\boldsymbol{O}}

\def\bfP{\boldsymbol{P}}

\def\bfQ{\boldsymbol{Q}}

\def\bfR{\boldsymbol{R}}

\def\bfS{\boldsymbol{S}}

\def\bfT{\boldsymbol{T}}

\def\bfU{\boldsymbol{U}}

\def\bfV{\boldsymbol{V}}

\def\bfW{\boldsymbol{W}}

\def\bfX{\boldsymbol{X}}

\def\bfY{\boldsymbol{Y}}

\def\bfZ{\boldsymbol{Z}}

\def\bfalpha{\boldsymbol{\alpha}}

\def\bfa{\boldsymbol{a}}

\def\bfbeta{\boldsymbol{\beta}}

\def\bfb{\boldsymbol{b}}

\def\bfcd{\dot{\bfc}}

\def\bfchi{\boldsymbol{\chi}}

\def\bfc{\boldsymbol{c}}

\def\bfd{\boldsymbol{d}}

\def\bfe{\boldsymbol{e}}

\def\bff{\boldsymbol{f}}

\def\bfgamma{\boldsymbol{\gamma}}

\def\bfg{\boldsymbol{g}}

\def\bfh{\boldsymbol{h}}

\def\bfi{\boldsymbol{i}}

\def\bfj{\boldsymbol{j}}

\def\bfk{\boldsymbol{k}}

\def\bflambda{\boldsymbol{\lambda}}

\def\bfl{\boldsymbol{l}}

\def\bfm{\boldsymbol{m}}

\def\bfn{\boldsymbol{n}}

\def\bfomega{\boldsymbol{\omega}}

\def\bfone{\boldsymbol{1}}

\def\bfo{\boldsymbol{o}}

\def\bfpdd{\ddot{\bfp}}

\def\bfpd{\dot{\bfp}}

\def\bfphi{\boldsymbol{\phi}}

\def\bfp{\boldsymbol{p}}

\def\bfq{\boldsymbol{q}}

\def\bfr{\boldsymbol{r}}

\def\bfsigma{\boldsymbol{\sigma}}

\def\bfs{\boldsymbol{s}}

\def\bftau{\boldsymbol{\tau}}

\def\bftheta{\boldsymbol{\theta}}

\def\bft{\boldsymbol{t}}

\def\bfu{\boldsymbol{u}}

\def\bfv{\boldsymbol{v}}

\def\bfw{\boldsymbol{w}}

\def\bfxi{\boldsymbol{\xi}}

\def\bfx{\boldsymbol{x}}

\def\bfy{\boldsymbol{y}}

\def\bfzero{\boldsymbol{0}}

\def\bfz{\boldsymbol{z}}

\def\defeq{\stackrel{\mathrm{def}}{=}}

\def\p{\boldsymbol{p}}

\def\qdd{\ddot{\bfq}}

\def\qd{\dot{\bfq}}

\def\q{\boldsymbol{q}}

\def\xd{\dot{x}}

\def\yd{\dot{y}}

\def\zd{\dot{z}}

\bfR^i_{k+1} = \bfR^i_{k} \left(\bfI_3 + \frac{\sin \theta_k}{\theta_k} [\bfomega_k \times] + \frac{1 - \cos \theta_k}{\theta_k^2} [\bfomega_k \times]^2 \right) R k + 1 i = R k i ( I 3 + θ k sin θ k [ ω k × ] + θ k 2 1 − cos θ k [ ω k × ] 2 ) with θ k = ∥ ω k ∥ Δ t \def\bfA{\boldsymbol{A}}

\def\bfB{\boldsymbol{B}}

\def\bfC{\boldsymbol{C}}

\def\bfD{\boldsymbol{D}}

\def\bfE{\boldsymbol{E}}

\def\bfF{\boldsymbol{F}}

\def\bfG{\boldsymbol{G}}

\def\bfH{\boldsymbol{H}}

\def\bfI{\boldsymbol{I}}

\def\bfJ{\boldsymbol{J}}

\def\bfK{\boldsymbol{K}}

\def\bfL{\boldsymbol{L}}

\def\bfM{\boldsymbol{M}}

\def\bfN{\boldsymbol{N}}

\def\bfO{\boldsymbol{O}}

\def\bfP{\boldsymbol{P}}

\def\bfQ{\boldsymbol{Q}}

\def\bfR{\boldsymbol{R}}

\def\bfS{\boldsymbol{S}}

\def\bfT{\boldsymbol{T}}

\def\bfU{\boldsymbol{U}}

\def\bfV{\boldsymbol{V}}

\def\bfW{\boldsymbol{W}}

\def\bfX{\boldsymbol{X}}

\def\bfY{\boldsymbol{Y}}

\def\bfZ{\boldsymbol{Z}}

\def\bfalpha{\boldsymbol{\alpha}}

\def\bfa{\boldsymbol{a}}

\def\bfbeta{\boldsymbol{\beta}}

\def\bfb{\boldsymbol{b}}

\def\bfcd{\dot{\bfc}}

\def\bfchi{\boldsymbol{\chi}}

\def\bfc{\boldsymbol{c}}

\def\bfd{\boldsymbol{d}}

\def\bfe{\boldsymbol{e}}

\def\bff{\boldsymbol{f}}

\def\bfgamma{\boldsymbol{\gamma}}

\def\bfg{\boldsymbol{g}}

\def\bfh{\boldsymbol{h}}

\def\bfi{\boldsymbol{i}}

\def\bfj{\boldsymbol{j}}

\def\bfk{\boldsymbol{k}}

\def\bflambda{\boldsymbol{\lambda}}

\def\bfl{\boldsymbol{l}}

\def\bfm{\boldsymbol{m}}

\def\bfn{\boldsymbol{n}}

\def\bfomega{\boldsymbol{\omega}}

\def\bfone{\boldsymbol{1}}

\def\bfo{\boldsymbol{o}}

\def\bfpdd{\ddot{\bfp}}

\def\bfpd{\dot{\bfp}}

\def\bfphi{\boldsymbol{\phi}}

\def\bfp{\boldsymbol{p}}

\def\bfq{\boldsymbol{q}}

\def\bfr{\boldsymbol{r}}

\def\bfsigma{\boldsymbol{\sigma}}

\def\bfs{\boldsymbol{s}}

\def\bftau{\boldsymbol{\tau}}

\def\bftheta{\boldsymbol{\theta}}

\def\bft{\boldsymbol{t}}

\def\bfu{\boldsymbol{u}}

\def\bfv{\boldsymbol{v}}

\def\bfw{\boldsymbol{w}}

\def\bfxi{\boldsymbol{\xi}}

\def\bfx{\boldsymbol{x}}

\def\bfy{\boldsymbol{y}}

\def\bfzero{\boldsymbol{0}}

\def\bfz{\boldsymbol{z}}

\def\defeq{\stackrel{\mathrm{def}}{=}}

\def\p{\boldsymbol{p}}

\def\qdd{\ddot{\bfq}}

\def\qd{\dot{\bfq}}

\def\q{\boldsymbol{q}}

\def\xd{\dot{x}}

\def\yd{\dot{y}}

\def\zd{\dot{z}}

\theta_k = \| \omega_k \| \Delta t θ k = ∥ ω k ∥Δ t
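A minimal sketch of one integration step in plain Python, with matrices stored as nested lists so that it runs without external libraries. It assumes `w` is the gyroscope reading $\bfomega_k$ in rad/s expressed in $B$ and `dt` is $\Delta t$ in seconds; the function names (`skew`, `exp_so3`, `integrate_gyro`) are illustrative, not from any particular library. When $\theta_k$ is tiny, the coefficients of Rodrigues' formula become $0/0$ numerically, so the sketch switches to their Taylor expansions below an arbitrary threshold.

```python
import math

Matrix = list[list[float]]
Vector = list[float]


def skew(w: Vector) -> Matrix:
    """Skew-symmetric matrix [w x], such that skew(w) times v equals cross(w, v)."""
    wx, wy, wz = w
    return [[0.0, -wz, wy],
            [wz, 0.0, -wx],
            [-wy, wx, 0.0]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def exp_so3(w: Vector, dt: float) -> Matrix:
    """exp([w x] dt) computed with Rodrigues' rotation formula."""
    theta = math.sqrt(sum(x * x for x in w)) * dt
    if theta < 1e-6:
        # Taylor expansions of sin(t)/t and (1 - cos(t))/t^2 avoid 0/0
        c1 = 1.0 - theta**2 / 6.0
        c2 = 0.5 - theta**2 / 24.0
    else:
        c1 = math.sin(theta) / theta
        c2 = (1.0 - math.cos(theta)) / theta**2
    K = [[x * dt for x in row] for row in skew(w)]  # [w x] dt
    K2 = matmul(K, K)                                # [w x]^2 dt^2
    return [[(1.0 if i == j else 0.0) + c1 * K[i][j] + c2 * K2[i][j]
             for j in range(3)] for i in range(3)]


def integrate_gyro(R: Matrix, w: Vector, dt: float) -> Matrix:
    """One step R^i_{k+1} = R^i_k exp([w_k x] dt), with w_k expressed in B."""
    return matmul(R, exp_so3(w, dt))


# Usage: spin at pi/2 rad/s about z for 1 s, in 1000 steps of 1 ms
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
for _ in range(1000):
    R = integrate_gyro(R, [0.0, 0.0, math.pi / 2], 1e-3)
# R is now close to a 90-degree rotation about the z-axis
```

Repeated matrix products slowly accumulate rounding errors, so long-running implementations usually re-orthonormalize the estimate from time to time. Gyroscope bias and noise also make this integrated estimate drift over time.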

Accelerometer-based estimation

Accelerometers can be used to provide an alternative estimate of the floating

base orientation. When the robot is not moving, what these accelerometers see

on average is the gravity vector B g \def\bfA{\boldsymbol{A}}

\def\bfB{\boldsymbol{B}}

\def\bfC{\boldsymbol{C}}

\def\bfD{\boldsymbol{D}}

\def\bfE{\boldsymbol{E}}

\def\bfF{\boldsymbol{F}}

\def\bfG{\boldsymbol{G}}

\def\bfH{\boldsymbol{H}}

\def\bfI{\boldsymbol{I}}

\def\bfJ{\boldsymbol{J}}

\def\bfK{\boldsymbol{K}}

\def\bfL{\boldsymbol{L}}

\def\bfM{\boldsymbol{M}}

\def\bfN{\boldsymbol{N}}

\def\bfO{\boldsymbol{O}}

\def\bfP{\boldsymbol{P}}

\def\bfQ{\boldsymbol{Q}}

\def\bfR{\boldsymbol{R}}

\def\bfS{\boldsymbol{S}}

\def\bfT{\boldsymbol{T}}

\def\bfU{\boldsymbol{U}}

\def\bfV{\boldsymbol{V}}

\def\bfW{\boldsymbol{W}}

\def\bfX{\boldsymbol{X}}

\def\bfY{\boldsymbol{Y}}

\def\bfZ{\boldsymbol{Z}}

\def\bfalpha{\boldsymbol{\alpha}}

\def\bfa{\boldsymbol{a}}

\def\bfbeta{\boldsymbol{\beta}}

\def\bfb{\boldsymbol{b}}

\def\bfcd{\dot{\bfc}}

\def\bfchi{\boldsymbol{\chi}}

\def\bfc{\boldsymbol{c}}

\def\bfd{\boldsymbol{d}}

\def\bfe{\boldsymbol{e}}

\def\bff{\boldsymbol{f}}

\def\bfgamma{\boldsymbol{\gamma}}

\def\bfg{\boldsymbol{g}}

\def\bfh{\boldsymbol{h}}

\def\bfi{\boldsymbol{i}}

\def\bfj{\boldsymbol{j}}

\def\bfk{\boldsymbol{k}}

\def\bflambda{\boldsymbol{\lambda}}

\def\bfl{\boldsymbol{l}}

\def\bfm{\boldsymbol{m}}

\def\bfn{\boldsymbol{n}}

\def\bfomega{\boldsymbol{\omega}}

\def\bfone{\boldsymbol{1}}

\def\bfo{\boldsymbol{o}}

\def\bfpdd{\ddot{\bfp}}

\def\bfpd{\dot{\bfp}}

\def\bfphi{\boldsymbol{\phi}}

\def\bfp{\boldsymbol{p}}

\def\bfq{\boldsymbol{q}}

\def\bfr{\boldsymbol{r}}

\def\bfsigma{\boldsymbol{\sigma}}

\def\bfs{\boldsymbol{s}}

\def\bftau{\boldsymbol{\tau}}

\def\bftheta{\boldsymbol{\theta}}

\def\bft{\boldsymbol{t}}

\def\bfu{\boldsymbol{u}}

\def\bfv{\boldsymbol{v}}

\def\bfw{\boldsymbol{w}}

\def\bfxi{\boldsymbol{\xi}}

\def\bfx{\boldsymbol{x}}

\def\bfy{\boldsymbol{y}}

\def\bfzero{\boldsymbol{0}}

\def\bfz{\boldsymbol{z}}

\def\defeq{\stackrel{\mathrm{def}}{=}}

\def\p{\boldsymbol{p}}

\def\qdd{\ddot{\bfq}}

\def\qd{\dot{\bfq}}

\def\q{\boldsymbol{q}}

\def\xd{\dot{x}}

\def\yd{\dot{y}}

\def\zd{\dot{z}}

{}^B\bfg B g B \def\bfA{\boldsymbol{A}}

\def\bfB{\boldsymbol{B}}

\def\bfC{\boldsymbol{C}}

\def\bfD{\boldsymbol{D}}

\def\bfE{\boldsymbol{E}}

\def\bfF{\boldsymbol{F}}

\def\bfG{\boldsymbol{G}}

\def\bfH{\boldsymbol{H}}

\def\bfI{\boldsymbol{I}}

\def\bfJ{\boldsymbol{J}}

\def\bfK{\boldsymbol{K}}

\def\bfL{\boldsymbol{L}}

\def\bfM{\boldsymbol{M}}

\def\bfN{\boldsymbol{N}}

\def\bfO{\boldsymbol{O}}

\def\bfP{\boldsymbol{P}}

\def\bfQ{\boldsymbol{Q}}

\def\bfR{\boldsymbol{R}}

\def\bfS{\boldsymbol{S}}

\def\bfT{\boldsymbol{T}}

\def\bfU{\boldsymbol{U}}

\def\bfV{\boldsymbol{V}}

\def\bfW{\boldsymbol{W}}

\def\bfX{\boldsymbol{X}}

\def\bfY{\boldsymbol{Y}}

\def\bfZ{\boldsymbol{Z}}

\def\bfalpha{\boldsymbol{\alpha}}

\def\bfa{\boldsymbol{a}}

\def\bfbeta{\boldsymbol{\beta}}

\def\bfb{\boldsymbol{b}}

\def\bfcd{\dot{\bfc}}

\def\bfchi{\boldsymbol{\chi}}

\def\bfc{\boldsymbol{c}}

\def\bfd{\boldsymbol{d}}

\def\bfe{\boldsymbol{e}}

\def\bff{\boldsymbol{f}}

\def\bfgamma{\boldsymbol{\gamma}}

\def\bfg{\boldsymbol{g}}

\def\bfh{\boldsymbol{h}}

\def\bfi{\boldsymbol{i}}

\def\bfj{\boldsymbol{j}}

\def\bfk{\boldsymbol{k}}

\def\bflambda{\boldsymbol{\lambda}}

\def\bfl{\boldsymbol{l}}

\def\bfm{\boldsymbol{m}}

\def\bfn{\boldsymbol{n}}

\def\bfomega{\boldsymbol{\omega}}

\def\bfone{\boldsymbol{1}}

\def\bfo{\boldsymbol{o}}

\def\bfpdd{\ddot{\bfp}}

\def\bfpd{\dot{\bfp}}

\def\bfphi{\boldsymbol{\phi}}

\def\bfp{\boldsymbol{p}}

\def\bfq{\boldsymbol{q}}

\def\bfr{\boldsymbol{r}}

\def\bfsigma{\boldsymbol{\sigma}}

\def\bfs{\boldsymbol{s}}

\def\bftau{\boldsymbol{\tau}}

\def\bftheta{\boldsymbol{\theta}}

\def\bft{\boldsymbol{t}}

\def\bfu{\boldsymbol{u}}

\def\bfv{\boldsymbol{v}}

\def\bfw{\boldsymbol{w}}

\def\bfxi{\boldsymbol{\xi}}

\def\bfx{\boldsymbol{x}}

\def\bfy{\boldsymbol{y}}

\def\bfzero{\boldsymbol{0}}

\def\bfz{\boldsymbol{z}}

\def\defeq{\stackrel{\mathrm{def}}{=}}

\def\p{\boldsymbol{p}}

\def\qdd{\ddot{\bfq}}

\def\qd{\dot{\bfq}}

\def\q{\boldsymbol{q}}

\def\xd{\dot{x}}

\def\yd{\dot{y}}

\def\zd{\dot{z}}

B B W g ≈ [ 0 0 − 9.81 ] \def\bfA{\boldsymbol{A}}

\def\bfB{\boldsymbol{B}}

\def\bfC{\boldsymbol{C}}

\def\bfD{\boldsymbol{D}}

\def\bfE{\boldsymbol{E}}

\def\bfF{\boldsymbol{F}}

\def\bfG{\boldsymbol{G}}

\def\bfH{\boldsymbol{H}}

\def\bfI{\boldsymbol{I}}

\def\bfJ{\boldsymbol{J}}

\def\bfK{\boldsymbol{K}}

\def\bfL{\boldsymbol{L}}

\def\bfM{\boldsymbol{M}}

\def\bfN{\boldsymbol{N}}

\def\bfO{\boldsymbol{O}}

\def\bfP{\boldsymbol{P}}

\def\bfQ{\boldsymbol{Q}}

\def\bfR{\boldsymbol{R}}

\def\bfS{\boldsymbol{S}}

\def\bfT{\boldsymbol{T}}

\def\bfU{\boldsymbol{U}}

\def\bfV{\boldsymbol{V}}

\def\bfW{\boldsymbol{W}}

\def\bfX{\boldsymbol{X}}

\def\bfY{\boldsymbol{Y}}

\def\bfZ{\boldsymbol{Z}}

\def\bfalpha{\boldsymbol{\alpha}}

\def\bfa{\boldsymbol{a}}

\def\bfbeta{\boldsymbol{\beta}}

\def\bfb{\boldsymbol{b}}

\def\bfcd{\dot{\bfc}}

\def\bfchi{\boldsymbol{\chi}}

\def\bfc{\boldsymbol{c}}

\def\bfd{\boldsymbol{d}}

\def\bfe{\boldsymbol{e}}

\def\bff{\boldsymbol{f}}

\def\bfgamma{\boldsymbol{\gamma}}

\def\bfg{\boldsymbol{g}}

\def\bfh{\boldsymbol{h}}

\def\bfi{\boldsymbol{i}}

\def\bfj{\boldsymbol{j}}

\def\bfk{\boldsymbol{k}}

\def\bflambda{\boldsymbol{\lambda}}

\def\bfl{\boldsymbol{l}}

\def\bfm{\boldsymbol{m}}

\def\bfn{\boldsymbol{n}}

\def\bfomega{\boldsymbol{\omega}}

\def\bfone{\boldsymbol{1}}

\def\bfo{\boldsymbol{o}}

\def\bfpdd{\ddot{\bfp}}

\def\bfpd{\dot{\bfp}}

\def\bfphi{\boldsymbol{\phi}}

\def\bfp{\boldsymbol{p}}

\def\bfq{\boldsymbol{q}}

\def\bfr{\boldsymbol{r}}

\def\bfsigma{\boldsymbol{\sigma}}

\def\bfs{\boldsymbol{s}}

\def\bftau{\boldsymbol{\tau}}

\def\bftheta{\boldsymbol{\theta}}

\def\bft{\boldsymbol{t}}

\def\bfu{\boldsymbol{u}}

\def\bfv{\boldsymbol{v}}

\def\bfw{\boldsymbol{w}}

\def\bfxi{\boldsymbol{\xi}}

\def\bfx{\boldsymbol{x}}

\def\bfy{\boldsymbol{y}}

\def\bfzero{\boldsymbol{0}}

\def\bfz{\boldsymbol{z}}

\def\defeq{\stackrel{\mathrm{def}}{=}}

\def\p{\boldsymbol{p}}

\def\qdd{\ddot{\bfq}}

\def\qd{\dot{\bfq}}

\def\q{\boldsymbol{q}}

\def\xd{\dot{x}}

\def\yd{\dot{y}}

\def\zd{\dot{z}}

{}^W\bfg \approx [0 \, 0 \, {-9.81}] W g ≈ [ 0 0 − 9.81 ] R g = W R B \def\bfA{\boldsymbol{A}}

\def\bfB{\boldsymbol{B}}

\def\bfC{\boldsymbol{C}}

\def\bfD{\boldsymbol{D}}

\def\bfE{\boldsymbol{E}}

\def\bfF{\boldsymbol{F}}

\def\bfG{\boldsymbol{G}}

\def\bfH{\boldsymbol{H}}

\def\bfI{\boldsymbol{I}}

\def\bfJ{\boldsymbol{J}}

\def\bfK{\boldsymbol{K}}

\def\bfL{\boldsymbol{L}}

\def\bfM{\boldsymbol{M}}

\def\bfN{\boldsymbol{N}}

\def\bfO{\boldsymbol{O}}

\def\bfP{\boldsymbol{P}}

\def\bfQ{\boldsymbol{Q}}

\def\bfR{\boldsymbol{R}}

\def\bfS{\boldsymbol{S}}

\def\bfT{\boldsymbol{T}}

\def\bfU{\boldsymbol{U}}

\def\bfV{\boldsymbol{V}}

\def\bfW{\boldsymbol{W}}

\def\bfX{\boldsymbol{X}}

\def\bfY{\boldsymbol{Y}}

\def\bfZ{\boldsymbol{Z}}

\def\bfalpha{\boldsymbol{\alpha}}

\def\bfa{\boldsymbol{a}}

\def\bfbeta{\boldsymbol{\beta}}

\def\bfb{\boldsymbol{b}}

\def\bfcd{\dot{\bfc}}

\def\bfchi{\boldsymbol{\chi}}

\def\bfc{\boldsymbol{c}}

\def\bfd{\boldsymbol{d}}

\def\bfe{\boldsymbol{e}}

\def\bff{\boldsymbol{f}}

\def\bfgamma{\boldsymbol{\gamma}}

\def\bfg{\boldsymbol{g}}

\def\bfh{\boldsymbol{h}}

\def\bfi{\boldsymbol{i}}

\def\bfj{\boldsymbol{j}}

\def\bfk{\boldsymbol{k}}

\def\bflambda{\boldsymbol{\lambda}}

\def\bfl{\boldsymbol{l}}

\def\bfm{\boldsymbol{m}}

\def\bfn{\boldsymbol{n}}

\def\bfomega{\boldsymbol{\omega}}

\def\bfone{\boldsymbol{1}}

\def\bfo{\boldsymbol{o}}

\def\bfpdd{\ddot{\bfp}}

\def\bfpd{\dot{\bfp}}

\def\bfphi{\boldsymbol{\phi}}

\def\bfp{\boldsymbol{p}}

\def\bfq{\boldsymbol{q}}

\def\bfr{\boldsymbol{r}}

\def\bfsigma{\boldsymbol{\sigma}}

\def\bfs{\boldsymbol{s}}

\def\bftau{\boldsymbol{\tau}}

\def\bftheta{\boldsymbol{\theta}}

\def\bft{\boldsymbol{t}}

\def\bfu{\boldsymbol{u}}

\def\bfv{\boldsymbol{v}}

\def\bfw{\boldsymbol{w}}

\def\bfxi{\boldsymbol{\xi}}

\def\bfx{\boldsymbol{x}}

\def\bfy{\boldsymbol{y}}

\def\bfzero{\boldsymbol{0}}

\def\bfz{\boldsymbol{z}}

\def\defeq{\stackrel{\mathrm{def}}{=}}

\def\p{\boldsymbol{p}}

\def\qdd{\ddot{\bfq}}

\def\qd{\dot{\bfq}}

\def\q{\boldsymbol{q}}

\def\xd{\dot{x}}

\def\yd{\dot{y}}

\def\zd{\dot{z}}

\bfR^g = {}^W \bfR_B R g = W R B the rotation

matrix that sends

B g \def\bfA{\boldsymbol{A}}

\def\bfB{\boldsymbol{B}}

\def\bfC{\boldsymbol{C}}

\def\bfD{\boldsymbol{D}}

\def\bfE{\boldsymbol{E}}

\def\bfF{\boldsymbol{F}}

\def\bfG{\boldsymbol{G}}

\def\bfH{\boldsymbol{H}}

\def\bfI{\boldsymbol{I}}

\def\bfJ{\boldsymbol{J}}

\def\bfK{\boldsymbol{K}}

\def\bfL{\boldsymbol{L}}

\def\bfM{\boldsymbol{M}}

\def\bfN{\boldsymbol{N}}

\def\bfO{\boldsymbol{O}}

\def\bfP{\boldsymbol{P}}

\def\bfQ{\boldsymbol{Q}}

\def\bfR{\boldsymbol{R}}

\def\bfS{\boldsymbol{S}}

\def\bfT{\boldsymbol{T}}

\def\bfU{\boldsymbol{U}}

\def\bfV{\boldsymbol{V}}

\def\bfW{\boldsymbol{W}}

\def\bfX{\boldsymbol{X}}

\def\bfY{\boldsymbol{Y}}

\def\bfZ{\boldsymbol{Z}}

\def\bfalpha{\boldsymbol{\alpha}}

\def\bfa{\boldsymbol{a}}

\def\bfbeta{\boldsymbol{\beta}}

\def\bfb{\boldsymbol{b}}

\def\bfcd{\dot{\bfc}}

\def\bfchi{\boldsymbol{\chi}}

\def\bfc{\boldsymbol{c}}

\def\bfd{\boldsymbol{d}}

\def\bfe{\boldsymbol{e}}

\def\bff{\boldsymbol{f}}

\def\bfgamma{\boldsymbol{\gamma}}

\def\bfg{\boldsymbol{g}}

\def\bfh{\boldsymbol{h}}

\def\bfi{\boldsymbol{i}}

\def\bfj{\boldsymbol{j}}

\def\bfk{\boldsymbol{k}}

\def\bflambda{\boldsymbol{\lambda}}

\def\bfl{\boldsymbol{l}}

\def\bfm{\boldsymbol{m}}

\def\bfn{\boldsymbol{n}}

\def\bfomega{\boldsymbol{\omega}}

\def\bfone{\boldsymbol{1}}

\def\bfo{\boldsymbol{o}}

\def\bfpdd{\ddot{\bfp}}

\def\bfpd{\dot{\bfp}}

\def\bfphi{\boldsymbol{\phi}}

\def\bfp{\boldsymbol{p}}

\def\bfq{\boldsymbol{q}}

\def\bfr{\boldsymbol{r}}

\def\bfsigma{\boldsymbol{\sigma}}

\def\bfs{\boldsymbol{s}}

\def\bftau{\boldsymbol{\tau}}

\def\bftheta{\boldsymbol{\theta}}

\def\bft{\boldsymbol{t}}

\def\bfu{\boldsymbol{u}}

\def\bfv{\boldsymbol{v}}

\def\bfw{\boldsymbol{w}}

\def\bfxi{\boldsymbol{\xi}}

\def\bfx{\boldsymbol{x}}

\def\bfy{\boldsymbol{y}}

\def\bfzero{\boldsymbol{0}}

\def\bfz{\boldsymbol{z}}

\def\defeq{\stackrel{\mathrm{def}}{=}}

\def\p{\boldsymbol{p}}

\def\qdd{\ddot{\bfq}}

\def\qd{\dot{\bfq}}

\def\q{\boldsymbol{q}}

\def\xd{\dot{x}}

\def\yd{\dot{y}}

\def\zd{\dot{z}}

{}^B \bfg B g W g \def\bfA{\boldsymbol{A}}


${}^W \boldsymbol{g}$
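As a rough illustration of how the gravity vector expressed in the base frame, ${}^B \boldsymbol{g}$, relates to the same vector in the world frame, ${}^W \boldsymbol{g}$, here is a minimal sketch. It assumes the convention ${}^W \boldsymbol{g} = {}^W \boldsymbol{R}_B \, {}^B \boldsymbol{g}$, a world frame with gravity along $-z$, and a ZYX (yaw, pitch, roll) Euler parameterization; all function names are hypothetical and this is not necessarily the estimator used in this post. It shows that gravity alone determines roll and pitch, while yaw stays unobservable. Note also that an accelerometer at rest reads the specific force, i.e. minus gravity, so a real reading would need its sign flipped before being passed in as ${}^B \boldsymbol{g}$.

```python
import math

# Assumption: R_WB = Rz(yaw) @ Ry(pitch) @ Rx(roll), mapping base-frame
# coordinates to world-frame coordinates.
def rotation_from_rpy(roll, pitch, yaw):
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def mat_vec(M, v):
    """3x3 matrix times 3-vector."""
    return [sum(M[i][j] * v[j] for j in range(3)) for i in range(3)]

def transpose(M):
    return [[M[j][i] for j in range(3)] for i in range(3)]

def roll_pitch_from_gravity(g_B):
    """Recover roll and pitch from gravity expressed in the base frame.

    With g_W = (0, 0, -g), g_B = R_WB^T g_W equals
    (g sin(pitch), -g cos(pitch) sin(roll), -g cos(pitch) cos(roll)),
    which does not depend on yaw: gravity cannot observe heading.
    Valid for |pitch| < 90 degrees.
    """
    gx, gy, gz = g_B
    roll = math.atan2(-gy, -gz)
    pitch = math.atan2(gx, math.hypot(gy, gz))
    return roll, pitch

# Usage: simulate a tilted base, then recover its tilt from gravity.
G = 9.81
g_W = [0.0, 0.0, -G]
R_WB = rotation_from_rpy(0.3, -0.2, 1.0)   # arbitrary ground-truth attitude
g_B = mat_vec(transpose(R_WB), g_W)        # gravity seen from the base frame
roll, pitch = roll_pitch_from_gravity(g_B)
# Any yaw is consistent with g_B; pick yaw = 0 for the reconstructed rotation.
R_est = rotation_from_rpy(roll, pitch, 0.0)
```

Because the reconstructed rotation sets yaw to zero, it maps ${}^B \boldsymbol{g}$ back onto ${}^W \boldsymbol{g}$ but will generally disagree with the true heading; heading has to come from another source such as gyroscope integration or kinematics.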
