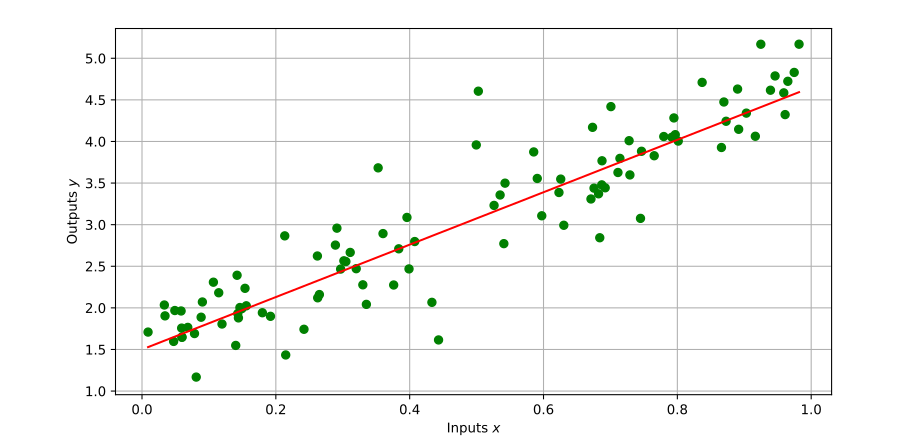

Simple linear regression is a particular case of linear regression where we assume that the output $y \in \mathbb{R}$ is an affine function of the input $x \in \mathbb{R}$:

$$y = \alpha + \beta x$$

We gather observations $(x_i, y_i)$ and estimate the parameters $(\alpha, \beta)$ from them.

Compared to a full-fledged linear regression, simple linear regression has a number of properties we can use for faster computations and online updates. In this post, we will see how to implement it in Python, first with a fixed dataset, then with online updates, and finally with a sliding-window variant where recent observations have more weight than older ones.

Linear regression

Let us start with a vanilla solution using an ordinary linear regression solver. We stack our inputs into a vector $\boldsymbol{x} = [x_1, \ldots, x_N]^\top$ and our outputs into a vector $\boldsymbol{y} = [y_1, \ldots, y_N]^\top$. Our problem is then the least squares problem:

$$\underset{\alpha, \beta}{\mathrm{minimize}} \ \| \boldsymbol{y} - \alpha \boldsymbol{1} - \beta \boldsymbol{x} \|_2^2$$

where $\boldsymbol{1}$ is the vector of ones with the same dimension as $\boldsymbol{x}$ and $\boldsymbol{y}$. The parameter $\alpha$ is called the intercept while $\beta$ is the slope (or coefficient).
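To make the objective concrete, here is a minimal sketch that evaluates the residual sum of squares for candidate parameters. The helper name `residual_sum_of_squares` and the toy data are illustrative assumptions, not part of the original post.

```python
import numpy as np

def residual_sum_of_squares(x, y, alpha, beta):
    """Evaluate || y - alpha * 1 - beta * x ||_2^2 (hypothetical helper)."""
    residuals = y - alpha - beta * x  # the scalar alpha broadcasts like alpha * 1
    return float(np.dot(residuals, residuals))

# Toy, noise-free data lying exactly on the line y = 1 + 2 x
x = np.array([0.0, 1.0, 2.0, 3.0])
y = 1.0 + 2.0 * x

loss_exact = residual_sum_of_squares(x, y, alpha=1.0, beta=2.0)
loss_shifted = residual_sum_of_squares(x, y, alpha=1.5, beta=2.0)
```

With the exact parameters the loss is zero. Shifting the intercept by 0.5 adds 0.25 per sample, so the loss becomes 1.0 for these four points.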

Using a machine learning library

For our tests, we will generate data using the same model as for the figure above:

    import numpy as np

    def generate_dataset(size=10000, alpha=1.5, beta=3.15):
        # Inputs are uniform in [0, 1); outputs get Gaussian noise of standard deviation 0.4
        x = np.random.random(size)
        y = alpha + beta * x + np.random.normal(0, 0.4, size=x.shape)
        return (x, y)
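When comparing implementations, it can help to draw the same dataset on every run. The variant below is a sketch under that assumption: the `seed` parameter and the function name `generate_dataset_seeded` are additions for illustration, using NumPy's `default_rng` generator instead of the global random state.

```python
import numpy as np

def generate_dataset_seeded(size=10000, alpha=1.5, beta=3.15, seed=0):
    """Same model as above, but reproducible (``seed`` is an assumed extra)."""
    rng = np.random.default_rng(seed)
    x = rng.random(size)  # uniform samples in [0, 1)
    y = alpha + beta * x + rng.normal(0.0, 0.4, size=x.shape)
    return (x, y)

# Two calls with the same seed return identical arrays
x, y = generate_dataset_seeded(size=1000, seed=42)
x_again, y_again = generate_dataset_seeded(size=1000, seed=42)
```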

Any machine learning library will happily estimate the intercept and coefficients of a linear model from such observation data. Try it out with your favorite one! Here is, for example, how linear regression goes with scikit-learn:

    import sklearn.linear_model

    def simple_linear_regression_sklearn(x, y):
        regressor = sklearn.linear_model.LinearRegression()
        # scikit-learn expects inputs of shape (n_samples, n_features)
        regressor.fit(x.reshape(-1, 1), y)
        intercept = regressor.intercept_
        slope = regressor.coef_[0]
        return (intercept, slope)
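As one more option in the same spirit, NumPy alone can fit the line with a degree-1 polynomial fit. This is a cross-check sketch rather than something from the original post; the function name `simple_linear_regression_polyfit` is hypothetical, and the data is drawn from the same model with a fixed seed.

```python
import numpy as np

def simple_linear_regression_polyfit(x, y):
    """Fit y = intercept + slope * x with a degree-1 polynomial fit."""
    # np.polyfit returns coefficients from highest degree to lowest
    slope, intercept = np.polyfit(x, y, deg=1)
    return (intercept, slope)

# Same model as the dataset above: alpha = 1.5, beta = 3.15, noise std 0.4
rng = np.random.default_rng(0)
x = rng.random(10000)
y = 1.5 + 3.15 * x + rng.normal(0.0, 0.4, size=x.shape)
intercept, slope = simple_linear_regression_polyfit(x, y)
```

With 10,000 samples, the estimates should land close to the true intercept 1.5 and slope 3.15, up to the noise in the data.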

Yet, our problem is simpler than a general linear regression. What if we solved the least squares problem directly?

Using a least squares solver

We can rewrite our objective function in matrix form as:

∥ y − α 1 − β x ∥ 2 2 = ∥ [ 1 x ] [ α β ] − y ∥ 2 2 = ∥ R x − y ∥ 2 2 \def\bfA{\boldsymbol{A}}

\def\bfB{\boldsymbol{B}}

\def\bfC{\boldsymbol{C}}

\def\bfD{\boldsymbol{D}}

\def\bfE{\boldsymbol{E}}

\def\bfF{\boldsymbol{F}}

\def\bfG{\boldsymbol{G}}

\def\bfH{\boldsymbol{H}}

\def\bfI{\boldsymbol{I}}

\def\bfJ{\boldsymbol{J}}

\def\bfK{\boldsymbol{K}}

\def\bfL{\boldsymbol{L}}

\def\bfM{\boldsymbol{M}}

\def\bfN{\boldsymbol{N}}

\def\bfO{\boldsymbol{O}}

\def\bfP{\boldsymbol{P}}

\def\bfQ{\boldsymbol{Q}}

\def\bfR{\boldsymbol{R}}

\def\bfS{\boldsymbol{S}}

\def\bfT{\boldsymbol{T}}

\def\bfU{\boldsymbol{U}}

\def\bfV{\boldsymbol{V}}

\def\bfW{\boldsymbol{W}}

\def\bfX{\boldsymbol{X}}

\def\bfY{\boldsymbol{Y}}

\def\bfZ{\boldsymbol{Z}}

\def\bfalpha{\boldsymbol{\alpha}}

\def\bfa{\boldsymbol{a}}

\def\bfbeta{\boldsymbol{\beta}}

\def\bfb{\boldsymbol{b}}

\def\bfcd{\dot{\bfc}}

\def\bfchi{\boldsymbol{\chi}}

\def\bfc{\boldsymbol{c}}

\def\bfd{\boldsymbol{d}}

\def\bfe{\boldsymbol{e}}

\def\bff{\boldsymbol{f}}

\def\bfgamma{\boldsymbol{\gamma}}

\def\bfg{\boldsymbol{g}}

\def\bfh{\boldsymbol{h}}

\def\bfi{\boldsymbol{i}}

\def\bfj{\boldsymbol{j}}

\def\bfk{\boldsymbol{k}}

\def\bflambda{\boldsymbol{\lambda}}

\def\bfl{\boldsymbol{l}}

\def\bfm{\boldsymbol{m}}

\def\bfn{\boldsymbol{n}}

\def\bfomega{\boldsymbol{\omega}}

\def\bfone{\boldsymbol{1}}

\def\bfo{\boldsymbol{o}}

\def\bfpdd{\ddot{\bfp}}

\def\bfpd{\dot{\bfp}}

\def\bfphi{\boldsymbol{\phi}}

\def\bfp{\boldsymbol{p}}

\def\bfq{\boldsymbol{q}}

\def\bfr{\boldsymbol{r}}

\def\bfsigma{\boldsymbol{\sigma}}

\def\bfs{\boldsymbol{s}}

\def\bftau{\boldsymbol{\tau}}

\def\bftheta{\boldsymbol{\theta}}

\def\bft{\boldsymbol{t}}

\def\bfu{\boldsymbol{u}}

\def\bfv{\boldsymbol{v}}

\def\bfw{\boldsymbol{w}}

\def\bfxi{\boldsymbol{\xi}}

\def\bfx{\boldsymbol{x}}

\def\bfy{\boldsymbol{y}}

\def\bfzero{\boldsymbol{0}}

\def\bfz{\boldsymbol{z}}

\def\defeq{\stackrel{\mathrm{def}}{=}}

\def\p{\boldsymbol{p}}

\def\qdd{\ddot{\bfq}}

\def\qd{\dot{\bfq}}

\def\q{\boldsymbol{q}}

\def\xd{\dot{x}}

\def\yd{\dot{y}}

\def\zd{\dot{z}}

$$
\| \bfy - \alpha \bfone - \beta \bfx \|_2^2
= \left\|
\begin{bmatrix} \bfone & \bfx \end{bmatrix}
\begin{bmatrix} \alpha \\ \beta \end{bmatrix}
- \bfy
\right\|_2^2
= \left\| \bfR \begin{bmatrix} \alpha \\ \beta \end{bmatrix} - \bfy \right\|_2^2
$$

with $\bfR = \begin{bmatrix} \bfone & \bfx \end{bmatrix}$ the $N \times 2$ matrix whose first column is $\bfone$ and whose second column is $\bfx$. We can solve this problem with the `solve_ls` function from qpsolvers:

    import numpy as np
    import qpsolvers

    def simple_linear_regression_ls(x, y, solver="quadprog"):
        # Stack a column of ones next to the inputs to form the N x 2 matrix R
        shape = (x.shape[0], 1)
        R = np.hstack([np.ones(shape), x.reshape(shape)])
        # Least squares solution of R @ [intercept, slope] = y
        intercept, slope = qpsolvers.solve_ls(R, s=y, solver=solver)
        return (intercept, slope)

This results in a faster implementation than the previous one, for instance on my machine:

    In [1]: x, y = generate_dataset(1000 * 1000)

    In [2]: %timeit simple_linear_regression_sklearn(x, y)
    21.2 ms ± 22.8 µs per loop (mean ± std. dev. of 7 runs, 10 loops each)

    In [3]: %timeit simple_linear_regression_ls(x, y, solver="quadprog")
    11.4 ms ± 73.9 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)

The improvement may be due to avoiding extra conversions inside scikit-learn, or because the solver it uses internally is less efficient than quadprog for this type of problem. It is more significant for small datasets, and ultimately settles around $2\times$ […] $\bfR$ […] simple linear regression.
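As a sanity check of the least squares formulation, here is a minimal sketch that swaps in NumPy's `np.linalg.lstsq` for `qpsolvers.solve_ls`. It is not the implementation benchmarked in this post: the function name `simple_linear_regression_lstsq` and the noise-free demo data are assumptions made for illustration only.

```python
import numpy as np


def simple_linear_regression_lstsq(x, y):
    """Fit y ≈ intercept + slope * x by linear least squares (NumPy only)."""
    # N x 2 matrix R: a column of ones next to the column of inputs
    R = np.column_stack([np.ones(x.shape[0]), x])
    # np.linalg.lstsq minimizes ||R @ theta - y||_2 over theta = (intercept, slope)
    theta, _residuals, _rank, _singular_values = np.linalg.lstsq(R, y, rcond=None)
    intercept, slope = theta
    return intercept, slope


# Hypothetical noise-free data lying exactly on the line y = 1.5 + 0.5 * x
x_demo = np.linspace(0.0, 10.0, 50)
y_demo = 1.5 + 0.5 * x_demo
intercept_demo, slope_demo = simple_linear_regression_lstsq(x_demo, y_demo)
print(intercept_demo, slope_demo)
```

On such noise-free data the fit should recover the intercept and slope of the generating line up to floating-point error, which makes it a convenient reference when testing the other implementations.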

Simple linear regression

Let us expand our objective function using the vector dot product:

$$
\begin{align*}
& \| \bfy - (\alpha \bfone + \beta \bfx) \|_2^2 \\
& = ((\alpha \bfone + \beta \bfx) - \bfy) \cdot ((\alpha \bfone + \beta \bfx) - \bfy) \\
& = \| \alpha \bfone + \beta \bfx \|_2^2 - 2 \bfy \cdot (\alpha \bfone + \beta \bfx) + (\bfy \cdot \bfy) \\
& = \alpha^2 (\bfone \cdot \bfone) + 2 \alpha \beta (\bfone \cdot \bfx) + \beta^2 (\bfx \cdot \bfx) - 2 (\bfone \cdot \bfy) \alpha - 2 (\bfx \cdot \bfy) \beta + (\bfy \cdot \bfy)
\end{align*}
$$

Since this function is strictly convex (provided $\bfx$ is not a multiple of $\bfone$), its global minimum is the unique critical point where its gradient vanishes:

$$
\begin{align*}
\frac{\partial}{\partial \alpha} \| \bfy - (\alpha \bfone + \beta \bfx) \|_2^2 & = 0 &
\frac{\partial}{\partial \beta} \| \bfy - (\alpha \bfone + \beta \bfx) \|_2^2 & = 0
\end{align*}
$$

Using the dot product expansion, this condition becomes:

$$
\begin{align*}
2 (\bfone \cdot \bfone) \alpha + 2 (\bfone \cdot \bfx) \beta + 0 - 2 (\bfone \cdot \bfy) + 0 + 0 & = 0 \\
0 + 2 (\bfone \cdot \bfx) \alpha + 2 (\bfx \cdot \bfx) \beta - 0 - 2 (\bfx \cdot \bfy) + 0 & = 0
\end{align*}
$$

We end up with a $2 \times 2$ system of linear equations:

$$
\begin{align*}
(\bfone \cdot \bfone) \alpha + (\bfone \cdot \bfx) \beta & = (\bfone \cdot \bfy) \\
(\bfone \cdot \bfx) \alpha + (\bfx \cdot \bfx) \beta & = (\bfx \cdot \bfy)
\end{align*}
$$

We know its analytical solution by (manual Gaussian elimination or remembering the formula for) Cramer's rule:

$$
\begin{align*}
\alpha & = \frac{(\bfone \cdot \bfy) (\bfx \cdot \bfx) - (\bfx \cdot \bfy) (\bfone \cdot \bfx)}{(\bfone \cdot \bfone) (\bfx \cdot \bfx) - (\bfone \cdot \bfx)^2} &
\beta & = \frac{(\bfone \cdot \bfone) (\bfx \cdot \bfy) - (\bfone \cdot \bfx) (\bfone \cdot \bfy)}{(\bfone \cdot \bfone) (\bfx \cdot \bfx) - (\bfone \cdot \bfx)^2}
\end{align*}
$$

The numerators and denominator of these two expressions have a statistical interpretation detailed in fitting the regression line (Wikipedia). The denominator is non-negative by the Cauchy-Schwarz inequality. It can be zero in the degenerate case where $\bfx = x \bfone$, that is, when all inputs share the same value $x$. The implementation below does not check for this case.

    import numpy as np

    def simple_linear_regression_cramer(x, y):
        dot_1_1 = x.shape[0]  # == np.dot(ones, ones)
        dot_1_x = x.sum()  # == np.dot(x, ones)
        dot_1_y = y.sum()  # == np.dot(y, ones)
        dot_x_x = np.dot(x, x)
        dot_x_y = np.dot(x, y)
        det = dot_1_1 * dot_x_x - dot_1_x**2  # not checking det == 0
        intercept = (dot_1_y * dot_x_x - dot_x_y * dot_1_x) / det
        slope = (dot_1_1 * dot_x_y - dot_1_x * dot_1_y) / det
        return intercept, slope

This implementation is not only faster than the previous ones, it's also the first one where we leveraged some particular structure of our problem:

    In [1]: x, y = generate_dataset(1000 * 1000)

    In [2]: %timeit simple_linear_regression_ls(x, y, solver="quadprog")
    11.4 ms ± 36 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)

    In [3]: %timeit simple_linear_regression_cramer(x, y)
    3.72 ms ± 160 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)
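The closed-form solution can also be cross-checked numerically. The sketch below is an illustration under stated assumptions, not part of the benchmark: `cramer_fit` is a hypothetical compact restatement of the formulas above, and the dataset is made up with a fixed seed. It checks that the gradient vanishes at the computed point, compares the result with `np.polyfit`, and shows the determinant vanishing for constant inputs.

```python
import numpy as np


def cramer_fit(x, y):
    """Closed-form intercept and slope from the 2 x 2 system (hypothetical helper)."""
    n = x.shape[0]
    s_x, s_y = x.sum(), y.sum()
    s_xx, s_xy = np.dot(x, x), np.dot(x, y)
    det = n * s_xx - s_x**2  # not checking det == 0
    return (s_y * s_xx - s_xy * s_x) / det, (n * s_xy - s_x * s_y) / det


# Hypothetical noisy dataset, fixed seed for reproducibility
rng = np.random.default_rng(0)
x_check = rng.uniform(-1.0, 1.0, size=1000)
y_check = 0.3 + 2.0 * x_check + 0.1 * rng.standard_normal(1000)

alpha, beta = cramer_fit(x_check, y_check)

# 1. The gradient of the objective vanishes at (alpha, beta):
#    the residual is orthogonal to both the ones vector and x.
residual = y_check - (alpha + beta * x_check)
gradient = np.array([-2.0 * residual.sum(), -2.0 * np.dot(x_check, residual)])

# 2. np.polyfit returns coefficients from highest degree to lowest
slope_ref, intercept_ref = np.polyfit(x_check, y_check, deg=1)

# 3. Degenerate case: constant inputs make the determinant vanish
x_const = np.full(10, 3.0)
det_const = x_const.shape[0] * np.dot(x_const, x_const) - x_const.sum() ** 2

print(gradient, (alpha, beta), (intercept_ref, slope_ref), det_const)
```

A caller that may receive constant inputs would need to test the determinant before dividing; how to handle that case (raise, return a default, fall back to the mean of the outputs) is a design choice left open here.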

We can use some IPython magic to compare the three approaches we have seen so far in a quick benchmark:

    sizes = sorted([k * 10**d for k in [2, 5, 10] for d in range(6)])
    for size in sizes:
        x, y = generate_dataset(size)
        instructions = [
            "simple_linear_regression_sklearn(x, y)",
            'simple_linear_regression_ls(x, y, solver="quadprog")',
            "simple_linear_regression_cramer(x, y)",
        ]
        for instruction in instructions:
            print(instruction)  # helps manual labor to gather the numbers ;)
            # run_line_magic replaces the deprecated get_ipython().magic(...)
            get_ipython().run_line_magic("timeit", instruction)
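Outside of IPython, a similar measurement can be sketched with the standard-library `timeit` module. This is an illustrative variant, not the setup used for the numbers reported in this post: the helpers `cramer_fit`, `polyfit_fit` and `best_time`, the dataset sizes and the synthetic data are all assumptions, and `np.polyfit` stands in as a reference implementation.

```python
import timeit

import numpy as np


def cramer_fit(x, y):
    """Closed-form simple linear regression (hypothetical compact helper)."""
    n = x.shape[0]
    s_x, s_y = x.sum(), y.sum()
    s_xx, s_xy = np.dot(x, x), np.dot(x, y)
    det = n * s_xx - s_x**2  # not checking det == 0
    return (s_y * s_xx - s_xy * s_x) / det, (n * s_xy - s_x * s_y) / det


def polyfit_fit(x, y):
    """Reference implementation based on np.polyfit."""
    slope, intercept = np.polyfit(x, y, deg=1)
    return intercept, slope


def best_time(func, x, y, repeat=3, number=5):
    """Best average time per call (seconds) over `repeat` batches of `number` calls."""
    batches = timeit.repeat(lambda: func(x, y), repeat=repeat, number=number)
    return min(batches) / number


rng = np.random.default_rng(0)
results = {}
for size in [100, 10_000, 100_000]:
    # Hypothetical dataset; the post's generate_dataset is not reproduced here
    x = rng.uniform(size=size)
    y = 1.0 + 2.0 * x + 0.1 * rng.standard_normal(size)
    for func in (cramer_fit, polyfit_fit):
        elapsed = best_time(func, x, y)
        results[(func.__name__, size)] = elapsed
        print(f"{func.__name__:>12s}  N = {size:>7d}  {elapsed * 1e6:10.1f} µs per call")
```

Taking the minimum over batches is a common way to reduce the influence of background load on the measurement; absolute numbers will differ from machine to machine.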

Here is the outcome in a plot (raw data in the image's alt attribute):

We now have a reasonably efficient solution for simple linear regression over small- to medium-sized (millions of entries) datasets. All the solutions we have seen so far have been offline, meaning the dataset was generated then processed all at once. Let us now turn to the online case, where new observations $(x_i, y_i)$ come one after the other.

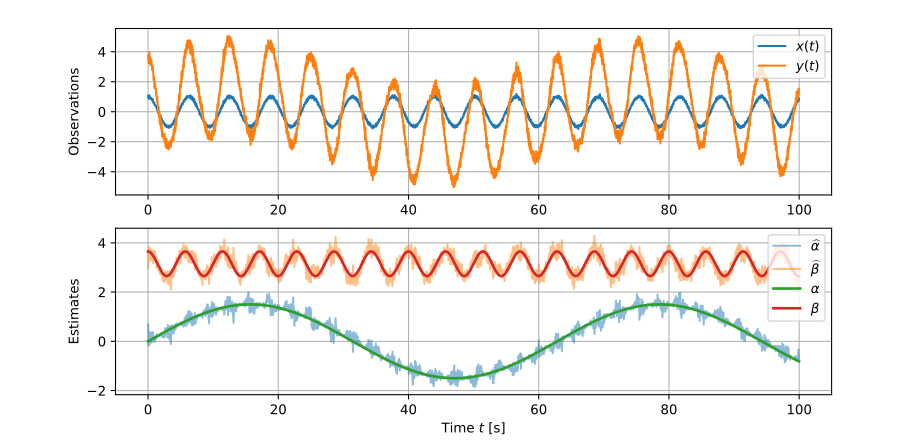

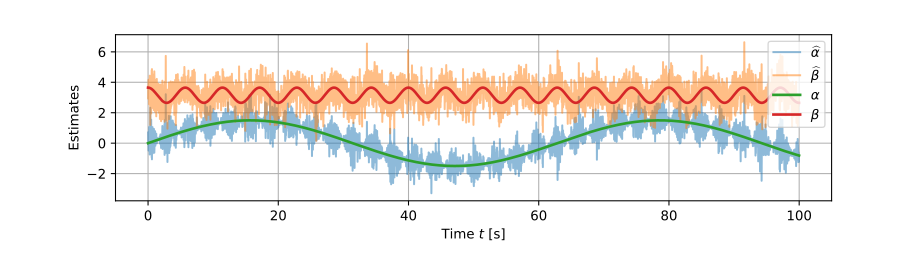

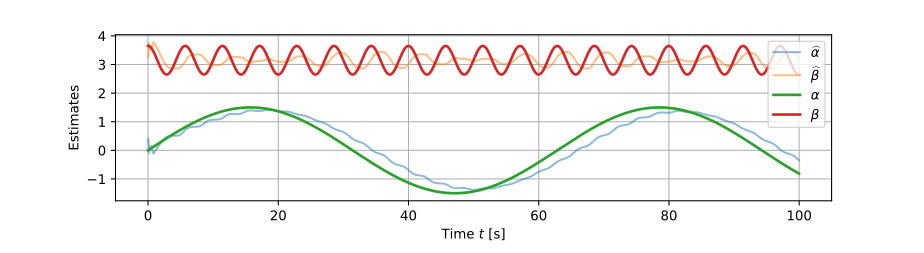
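As a rough illustration of fitting a line through the observations $(x_i, y_i)$, here is a minimal sketch in plain Python. It assumes the standard closed-form least-squares solution written with dot products, which may be arranged differently from the formulas in this post. The function name `fit_simple_linear_regression` is hypothetical, and reading $\beta$ as the slope is an assumption.

```python
def fit_simple_linear_regression(xs, ys):
    """Least-squares fit of y ~ alpha + beta * x (sketch, not the post's code).

    alpha is the intercept; beta is assumed to be the slope.
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")

    # Dot products 1.x, 1.y, x.x and x.y
    sum_x = sum(xs)
    sum_y = sum(ys)
    dot_xx = sum(x * x for x in xs)
    dot_xy = sum(x * y for x, y in zip(xs, ys))

    # Determinant of the 2x2 normal equations
    denom = n * dot_xx - sum_x ** 2
    if denom == 0:
        raise ValueError("all x values are identical; the slope is undefined")

    beta = (n * dot_xy - sum_x * sum_y) / denom
    alpha = (sum_y * dot_xx - sum_x * dot_xy) / denom
    return alpha, beta


# Points lying exactly on y = 1 + 2 x
alpha, beta = fit_simple_linear_regression([0, 1, 2, 3], [1, 3, 5, 7])
print(alpha, beta)
```

Everything here depends on the data only through four sums, so the whole fit reduces to a handful of scalar quantities.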

Online simple linear regression

The dot products we use to calculate the intercept $\alpha$
