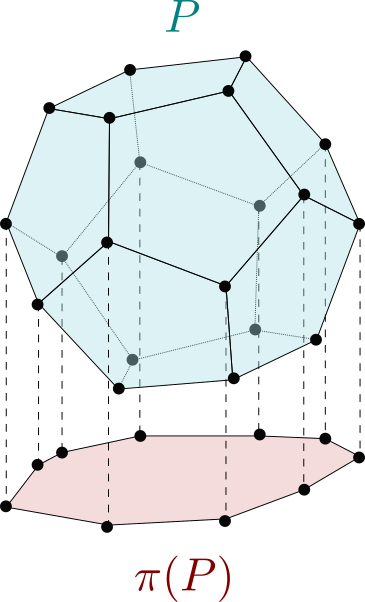

Consider a polytope given in halfspace representation by:

$$P = \{ (x, y) \in \mathbb{R}^n \times \mathbb{R}^m \mid A x + B y \leq c \}$$

The polytope $\pi(P) = \{ y \in \mathbb{R}^m \mid B' y \leq c' \}$ is the orthogonal projection of $P$ onto the $y$-coordinates:

$$y \in \pi(P) \Leftrightarrow \exists x \in \mathbb{R}^n, (x, y) \in P$$

More generally, an affine projection of $P$ is defined by:

$$\pi(P) = \{ y \in \mathbb{R}^m \mid \exists z \in P,\ y = E z + f \}$$

where $E$ is a matrix and $f$ a vector of compatible dimensions. The orthogonal projection above is the special case $E = [0 \ I_m]$, $f = 0$, with $z = (x, y)$. In what follows, given a halfspace representation of $P$, we want to compute a halfspace representation of $\pi(P)$.
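The definition already gives a membership test for a single point $y$, without computing any halfspace representation of $\pi(P)$: $y \in \pi(P)$ exactly when the linear constraints on $z$ are feasible. The sketch below uses SciPy's `linprog` and assumes the halfspace representation of $P$ is stacked as $C z \leq c$ with $C = [A \ B]$ and $z = (x, y)$. The names `C` and `in_projection` are illustrative and do not come from the original text.

```python
import numpy as np
from scipy.optimize import linprog


def in_projection(C, c, E, f, y):
    """Return True if y = E z + f for some z with C z <= c, i.e. y is in pi(P)."""
    dim = C.shape[1]
    res = linprog(
        np.zeros(dim),                # pure feasibility problem: zero objective
        A_ub=C, b_ub=c,               # z in P
        A_eq=E, b_eq=y - f,           # E z + f = y
        bounds=[(None, None)] * dim,  # z is free (linprog defaults to z >= 0)
    )
    return res.status == 0  # 0: feasible point found, 2: infeasible


# Triangle P = {(x, y) : x >= 0, y >= 0, x + y <= 1}, written as C z <= c
C = np.array([[-1.0, 0.0], [0.0, -1.0], [1.0, 1.0]])
c = np.array([0.0, 0.0, 1.0])

# Orthogonal projection onto y: E = [0 I], f = 0, so pi(P) = [0, 1]
E_orth, f_orth = np.array([[0.0, 1.0]]), np.zeros(1)
print(in_projection(C, c, E_orth, f_orth, np.array([0.5])))  # True
print(in_projection(C, c, E_orth, f_orth, np.array([1.5])))  # False
```

Each query costs one LP. This is why an explicit halfspace representation $B' y \leq c'$ pays off when many points must be tested.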

Double description method

Projecting is easy in vertex representation: for a polytope $P = \mathrm{conv}(V)$, the projection is the convex hull of the projected vertices:

$$\pi(P) = \mathrm{conv}(\{ E v + f, v \in V \})$$

Some projected vertices may fall strictly inside $\pi(P)$. The set $\{ E v + f, v \in V \}$ is therefore a vertex representation of $\pi(P)$ that can contain redundant points.
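To illustrate the last point, the sketch below projects the eight vertices of the cube $[-1, 1]^3$ along the diagonal direction $(1, 1, 1)$. It uses SciPy's `ConvexHull`, a Qhull wrapper rather than the cdd library discussed below, to find which projected points are actual vertices of $\pi(P)$. The matrix $E$ and offset $f$ are arbitrary choices made for this example.

```python
import itertools

import numpy as np
from scipy.spatial import ConvexHull

# Vertices V of the cube P = [-1, 1]^3, one per row
V = np.array(list(itertools.product([-1.0, 1.0], repeat=3)))

# Affine map y = E v + f; both rows of E are orthogonal to (1, 1, 1),
# so the projection is taken along that diagonal
E = np.array([[1.0, -1.0, 0.0], [1.0, 1.0, -2.0]])
f = np.array([1.0, 0.0])

projected = V @ E.T + f       # the points {E v + f, v in V}
hull = ConvexHull(projected)  # pi(P) is the convex hull of these points

print(len(hull.vertices))  # 6: pi(P) is a hexagon
print(sorted(set(range(len(V))) - set(hull.vertices)))  # [0, 7]
```

Vertices 0 and 7 are the cube corners $\pm(1, 1, 1)$. Both land on the center $f$ of the hexagon, which is why the projected points must still go through a convex hull computation.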

1. Enumerate the vertices $V$ of $P$ from its halfspace representation, using the double description method.

2. Project the vertices to $\pi(V) = \{ E v + f, v \in V \}$.

3. Apply the double description method again to convert the vertex representation $\pi(V)$ into a halfspace representation of $\pi(P)$.

The cdd library can be used for this purpose. One limitation of this approach is that it can be numerically unstable, and it becomes significantly slower as the dimension of the polytope increases.
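The three steps can be sketched in Python as follows. This sketch does not use cdd. It swaps in SciPy's Qhull-based `HalfspaceIntersection` (step 1) and `ConvexHull` (step 3) in place of the double description method. It also assumes $P$ is bounded and full-dimensional, because Qhull needs a point strictly inside $P$, computed here as a Chebyshev center with `linprog`. The helper names `chebyshev_center` and `project_via_vertices` are hypothetical and are not part of cdd or pypoman.

```python
import numpy as np
from scipy.optimize import linprog
from scipy.spatial import ConvexHull, HalfspaceIntersection


def chebyshev_center(C, c):
    """Center of the largest ball inside {z : C z <= c}."""
    dim = C.shape[1]
    norms = np.linalg.norm(C, axis=1)
    # Variables (z, r): maximize r subject to C_i z + r * ||C_i|| <= c_i
    cost = np.zeros(dim + 1)
    cost[-1] = -1.0
    res = linprog(
        cost,
        A_ub=np.hstack([C, norms[:, None]]),
        b_ub=c,
        bounds=[(None, None)] * dim + [(0, None)],
    )
    if res.status != 0 or res.x[-1] <= 1e-9:
        raise ValueError("could not find a point strictly inside P")
    return res.x[:-1]


def project_via_vertices(C, c, E, f):
    """Return (B_proj, c_proj) such that pi(P) = {y : B_proj y <= c_proj}."""
    # Step 1: vertices of P = {z : C z <= c}. SciPy expects rows [C_i, -c_i]
    # encoding the inequality C_i z - c_i <= 0.
    halfspaces = np.hstack([C, -c[:, None]])
    V = HalfspaceIntersection(halfspaces, chebyshev_center(C, c)).intersections
    # Step 2: project the vertices
    projected = V @ E.T + f
    # Step 3: back to halfspaces. Each row of hull.equations is [n, d] with
    # n . y + d <= 0 for points inside the hull.
    hull = ConvexHull(projected)
    return hull.equations[:, :-1], -hull.equations[:, -1]


# Cube [-1, 1]^3 with z = (x, y1, y2), projected onto (y1, y2): E = [0 I], f = 0
C = np.vstack([np.eye(3), -np.eye(3)])
c = np.ones(6)
E = np.array([[0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
B_proj, c_proj = project_via_vertices(C, c, E, np.zeros(2))
print(np.all(B_proj @ np.zeros(2) <= c_proj))            # True
print(np.all(B_proj @ np.array([1.5, 0.0]) <= c_proj))   # False
```

The returned pair plays the role of $(B', c')$ in $\pi(P) = \{ y \mid B' y \leq c' \}$. Like the cdd-based approach, this pipeline goes through the full vertex set of $P$. Its cost therefore grows quickly with the dimension of $P$.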

Open source software

An implementation of this method is available in the projection submodule of the pypoman Python library.

Ray shooting methods

Ray shooting methods provide a better alternative when the size of the

π ( P ) \def\bfA{\boldsymbol{A}}

\def\bfB{\boldsymbol{B}}

\def\bfC{\boldsymbol{C}}

\def\bfD{\boldsymbol{D}}

\def\bfE{\boldsymbol{E}}

\def\bfF{\boldsymbol{F}}

\def\bfG{\boldsymbol{G}}

\def\bfH{\boldsymbol{H}}

\def\bfI{\boldsymbol{I}}

\def\bfJ{\boldsymbol{J}}

\def\bfK{\boldsymbol{K}}

\def\bfL{\boldsymbol{L}}

\def\bfM{\boldsymbol{M}}

\def\bfN{\boldsymbol{N}}

\def\bfO{\boldsymbol{O}}

\def\bfP{\boldsymbol{P}}

\def\bfQ{\boldsymbol{Q}}

\def\bfR{\boldsymbol{R}}

\def\bfS{\boldsymbol{S}}

\def\bfT{\boldsymbol{T}}

\def\bfU{\boldsymbol{U}}

\def\bfV{\boldsymbol{V}}

\def\bfW{\boldsymbol{W}}

\def\bfX{\boldsymbol{X}}

\def\bfY{\boldsymbol{Y}}

\def\bfZ{\boldsymbol{Z}}

\def\bfalpha{\boldsymbol{\alpha}}

\def\bfa{\boldsymbol{a}}

\def\bfbeta{\boldsymbol{\beta}}

\def\bfb{\boldsymbol{b}}

\def\bfcd{\dot{\bfc}}

\def\bfchi{\boldsymbol{\chi}}

\def\bfc{\boldsymbol{c}}

\def\bfd{\boldsymbol{d}}

\def\bfe{\boldsymbol{e}}

\def\bff{\boldsymbol{f}}

\def\bfgamma{\boldsymbol{\gamma}}

\def\bfg{\boldsymbol{g}}

\def\bfh{\boldsymbol{h}}

\def\bfi{\boldsymbol{i}}

\def\bfj{\boldsymbol{j}}

\def\bfk{\boldsymbol{k}}

\def\bflambda{\boldsymbol{\lambda}}

\def\bfl{\boldsymbol{l}}

\def\bfm{\boldsymbol{m}}

\def\bfn{\boldsymbol{n}}

\def\bfomega{\boldsymbol{\omega}}

\def\bfone{\boldsymbol{1}}

\def\bfo{\boldsymbol{o}}

\def\bfpdd{\ddot{\bfp}}

\def\bfpd{\dot{\bfp}}

\def\bfphi{\boldsymbol{\phi}}

\def\bfp{\boldsymbol{p}}

\def\bfq{\boldsymbol{q}}

\def\bfr{\boldsymbol{r}}

\def\bfsigma{\boldsymbol{\sigma}}

\def\bfs{\boldsymbol{s}}

\def\bftau{\boldsymbol{\tau}}

\def\bftheta{\boldsymbol{\theta}}

\def\bft{\boldsymbol{t}}

\def\bfu{\boldsymbol{u}}

\def\bfv{\boldsymbol{v}}

\def\bfw{\boldsymbol{w}}

\def\bfxi{\boldsymbol{\xi}}

\def\bfx{\boldsymbol{x}}

\def\bfy{\boldsymbol{y}}

\def\bfzero{\boldsymbol{0}}

\def\bfz{\boldsymbol{z}}

\def\defeq{\stackrel{\mathrm{def}}{=}}

\def\p{\boldsymbol{p}}

\def\qdd{\ddot{\bfq}}

\def\qd{\dot{\bfq}}

\def\q{\boldsymbol{q}}

\def\xd{\dot{x}}

\def\yd{\dot{y}}

\def\zd{\dot{z}}

When the dimension $m$ of the projection is small, there are output-sensitive algorithms: their complexity depends on the number of facets of $\pi(P)$ (see also McMullen's Upper Bound Theorem).

Projecting to a 2D polygon

The 2D ray shooting algorithm was introduced in robotics by Bretl and Lall to compute the static-equilibrium polygon of a legged robot in multi-contact. It was used in subsequent humanoid works to compute other criteria such as multi-contact ZMP support areas and CoM acceleration cones.

The core idea of the algorithm goes as follows: let $\theta$ be an angle and $u_\theta = [\cos(\theta) \, \sin(\theta)]^T$ the corresponding unit direction in the plane of the projection (here $m = 2$). Solve the linear program (LP):

$$
\begin{array}{rl}
\underset{x \in \mathbb{R}^n, y \in \mathbb{R}^m}{\text{maximize}} & u_\theta^T y \\
\text{subject to} & A x \leq b \\
& C x = d \\
& y = E x + f
\end{array}
$$
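For experimentation, the LP for a single direction can be set up with SciPy's `linprog`. This is a minimal sketch, assuming NumPy and SciPy are available; the helper name `support_point` is hypothetical. Since $y = E x + f$ is an equality, the sketch substitutes $y$ out: maximizing $u_\theta^T y$ amounts to minimizing $-(E^T u_\theta)^T x$ over $x$, and $y^*$ is recovered afterwards.

```python
import numpy as np
from scipy.optimize import linprog


def support_point(A, b, C, d, E, f, theta):
    """Solve: maximize u_theta^T y  s.t.  A x <= b, C x = d, y = E x + f.

    Hypothetical helper for illustration. Pass C = d = None when there are
    no equality constraints. Returns the optimal pair (x, y).
    """
    u = np.array([np.cos(theta), np.sin(theta)])
    # Substitute y = E x + f: the objective becomes (E^T u)^T x plus the
    # constant u^T f. linprog minimizes, hence the minus sign.
    cost = -(E.T @ u)
    res = linprog(
        cost,
        A_ub=A, b_ub=b,
        A_eq=C, b_eq=d,
        bounds=(None, None),  # linprog defaults to x >= 0; x is free here
        method="highs",
    )
    if res.status != 0:
        raise RuntimeError(f"LP failed: {res.message}")
    x = res.x
    return x, E @ x + f
```

For instance, with $P = [-1, 1]^3$ (rows of `A` set to $\pm$ the identity, `b` all ones) and $\pi$ keeping the first two coordinates, `support_point(A, b, None, None, E, f, np.pi / 4)` should return $y^* \approx (1, 1)$, the corner of the projected square in direction $u_{\pi/4}$. Passing `bounds=(None, None)` matters: without it, `linprog` silently restricts all variables to be nonnegative.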

This LP computes simultaneously a point $x \in P$, here described by $A x \leq b$ and $C x = d$, and its projection $y = \pi(x) = E x + f$ that maximizes $u_\theta^T y$. The optimum $y^*$ is thus the point of $\pi(P)$ furthest away in the direction of $u_\theta$. When this maximizer is unique, $y^*$ is a vertex of $\pi(P)$, and the line orthogonal to $u_\theta$ passing through $y^*$ is a supporting hyperplane of $\pi(P)$ at $y^*$.

A supporting hyperplane (or line, since we are in 2D) is a line tangent to the polygon at a given point. Formally, it splits the plane into two half-planes: one side $S_0$ contains $\pi(P)$ entirely.
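The definition of a supporting line can be made concrete with a short sketch. It assumes the polygon is given by its vertices, which is a hypothetical input format chosen for illustration (the text above works from a halfspace representation), and it introduces a hypothetical outward normal `a`. The supporting line with normal `a` passes through the vertex that is furthest along `a`, and the polygon lies in the half-plane $S_0 = \{y : a^\top y \leq h\}$.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def supporting_line(vertices: List[Point], a: Point) -> Tuple[float, Point]:
    """Supporting line of a convex polygon with (hypothetical) outward normal `a`.

    Returns (h, touch): the line is {y : a . y = h}, the polygon lies in the
    half-plane S_0 = {y : a . y <= h}, and `touch` is a vertex on the line.
    """
    def dot(p: Point) -> float:
        return a[0] * p[0] + a[1] * p[1]

    touch = max(vertices, key=dot)  # vertex furthest along direction a
    return dot(touch), touch


def in_s0(y: Point, a: Point, h: float, tol: float = 1e-9) -> bool:
    """True if point y lies in the closed half-plane S_0 = {y : a . y <= h}."""
    return a[0] * y[0] + a[1] * y[1] <= h + tol


if __name__ == "__main__":
    square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
    a = (1.0, 1.0)
    h, touch = supporting_line(square, a)
    print(h, touch)  # 2.0 (1.0, 1.0)
    # Every vertex, hence by convexity the whole polygon, lies in S_0.
    print(all(in_s0(v, a, h) for v in square))  # True
```

For the unit square and $a = (1, 1)$, the supporting line is $y_1 + y_2 = 2$, which touches the polygon only at the vertex $(1, 1)$. Checking the vertices is enough because a half-plane is convex, so it contains the convex hull of any points it contains.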