ICRA 2014 Workshop

Robotics and Military Applications:

From Current Research and Deployments to Legal and Ethical Questions

Location and time

Room S421, Hong Kong Convention and Exhibition Centre

June 1, 2014 (Sun), 09:00 – 15:40

Program

| Time | Session |
|---|---|
| 09:00 | Start |
| 09:00 – 09:20 | Ludovic Righetti: Introduction |
| 09:20 – 09:55 | George Bekey: "Some ethical dilemmas in military robotics" |
| 09:55 – 10:30 | Noel Sharkey: "Do you want our robots to kill and maim in your name?" |
| 10:30 – 10:50 | Coffee Break |
| 10:50 – 11:10 | Anthony Finn: "Lethal Autonomous Robots" |
| 11:10 – 11:30 | Robert Sparrow: "Working for war? Military robotics and the moral responsibilities of engineers" |
| 11:30 – 11:50 | Timothy Bretl: "Thoughts from a robotics researcher" |
| 11:50 – 12:30 | Panel discussion 1: Ethical problems of autonomous military robots |
| 12:30 – 14:00 | Lunch Break |
| 14:00 – 14:35 | Paul Scharre: "U.S. Department of Defense policy on autonomy in weapon systems" |
| 14:35 – 15:10 | Stephan Sonnenberg: "Why drones are different" |
| 15:10 – 15:40 | Panel discussion 2: Impact of current and future deployments of military robots |
| 15:40 | End |

Topics

- Current deployments of military robots and their implications (effectiveness, casualties, consequences for civilian populations, etc.)

- Legal and ethical issues of military robots

- Current and future research topics in robotics connected with military applications

- Funding of scientific research by military agencies

Military robots are no longer restricted to science-fiction novels or to a distant future; they have become a concrete reality. Unmanned aerial robots, or drones, are currently deployed, mainly by the United States, in several countries such as Afghanistan, Pakistan, Yemen and Somalia. According to independent investigations, such robots have caused several thousand casualties, including a non-negligible number of civilians. As a result, increased autonomy in weapon systems is attracting considerable attention from civil society, prompting field research on the consequences of drone use, as well as legal and ethical debates about the growing use of robotics technology in the military.

At the same time, within the academic robotics research community, a considerable number of programs are motivated by military applications and/or funded by military agencies. Many of the advances obtained in such programs have been, or will be, used to build operational military robots. Robotics researchers, who stand at the very beginning of the chain that eventually leads to operational military robots, therefore have an important part to play in the societal debate mentioned above.

The goal of this workshop is to stimulate the debate on military applications within the robotics community. To this end, prominent speakers from academia, NGOs and governmental agencies will present facts and data about current research on and deployments of military robots (technologies, motivations, casualties, economic and psychological consequences for the affected populations, etc.), as well as legal and ethical perspectives on military robots.

In contrast with existing efforts on "Roboethics", the focus here is exclusively on military robots and the burning issues raised by their ongoing deployment. We hope that this workshop will help stimulate reflection on military robots among robotics researchers, both as individuals and as a community, so that they can in turn contribute to this important societal debate.

Organizers

- Quang-Cuong Pham, Nanyang Technological University, Singapore [cuong.pham {at} normalesup.org]

- Ludovic Righetti, Max Planck Institute, Germany [ludovic.righetti {at} tuebingen.mpg.de]

- Max Planck Institute, Germany (also financial sponsor)

This workshop has no military purpose. Its sole aim is to discuss legal and ethical questions related to military robotics.

Invited speakers

|

George Bekey, University of Southern

California

"Some ethical dilemmas in military robotics" |

|

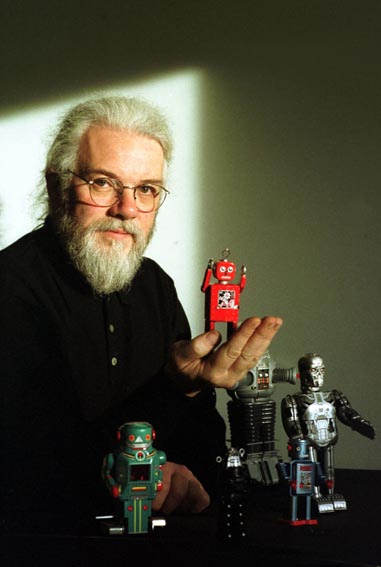

Noel Sharkey, University of

Sheffield and International Committee for Robot

Arms Control

"Do you want our robots to kill and maim in your name ?" |

|

Anthony

Finn, Director of Defence And Systems Institute, University

of South Australia "Lethal Autonomous Robots" |

|

Robert

Sparrow, Monash University

"Working for war ? Military robotics and the moral responsibilities of engineers" |

|

Timothy Bretl,

University of Illinois at Urbana-Champaign

"Thoughts from a robotics researcher" |

|

Paul

Scharre, Center for a New

American Security

"U.S. Department of Defense policy on autonomy in weapon systems" |

|

Stephan

Sonnenberg, International

Human Rights and Conflict Resolution Clinic, Stanford Law School"Why drones are different" |

Abstracts

Some ethical dilemmas in military robotics

George Bekey, University of Southern California

The field of military robotics involves a large number of ethical issues, some of which include inherent contradictions. Some of these contradictions arise from the imprecise nature of the field of ethics, which features a variety of definitions and theories. Other real or apparent contradictions arise from the inherent ambiguity in the “Laws of War” (LOW) and the “Rules of Engagement” (ROE). In this paper we present and analyze some of these ambiguities and contradictions, and illustrate them with examples. One problem arising from these dilemmas is that it may be difficult or impossible to assign priorities to particular actions to be performed by the robots in question. A second difficulty concerns the design of Arkin’s “ethical governor”. At this time it is not clear whether these dilemmas can be eliminated, and it may be necessary to learn to live with them, perhaps by pre-assigning priorities to particular actions.

About the speaker

Dr. George A. Bekey is an Emeritus Professor of Computer Science and founder of the Robotics Research Laboratory at the University of Southern California (USC). His research interests include autonomous robotic systems, applications of robots to biology and medicine, and the ethical implications of robotics. He received his Ph.D. in Engineering in 1962 from the University of California at Los Angeles (UCLA). During his USC career he was Chairman of the Electrical Engineering Department and later of the Computer Science Department. From 1997 to 2001 he served as Associate Dean for Research of the School of Engineering. He has published over 240 papers and several books in robotics, biomedical engineering, computer simulation, control systems and human-machine systems. His latest book, Autonomous Robots: From Biological Inspiration to Implementation and Control, was published by MIT Press in 2005. He is the founding editor of two major international robotics journals. He is co-editor of Robot Ethics (MIT Press, 2012).

Dr. Bekey is a Member of the National Academy of Engineering and a Fellow of the Institute of Electrical and Electronics Engineers (IEEE), the American Association for Artificial Intelligence (AAAI), and the American Association for the Advancement of Science (AAAS). He has received numerous awards from professional societies and from USC.

He officially retired from USC in 2002, but continues to be active on a part-time basis at the University, as well as in consulting and service on the advisory boards of several high-technology companies. Dr. Bekey is also affiliated with California Polytechnic State University, San Luis Obispo, where he is a Research Scholar in Residence and a Distinguished Adjunct Professor of Engineering.

Do you want our robots to kill and maim in your name?

Noel Sharkey, University of Sheffield and International Committee for Robot Arms Control

Rapid progress towards autonomous weapon systems places us on the doorstep of the full automation of warfare. Autonomous weapon systems are weapons that, once activated, can select targets and attack them with violent force without further human intervention. As roboticists, it is our social responsibility to consider the application of the technologies that we are helping to create. This talk will examine some of the issues that make the move towards the automation of warfare unpalatable: (i) the inability of the technology to comply with International Humanitarian Law; (ii) ethical issues concerned with delegating the kill decision to a machine; (iii) new problems for international security and stability. I will also discuss how the "Campaign to Stop Killer Robots" (50+ humanitarian Non-Governmental Organisations (NGOs) from more than two dozen countries) has succeeded in raising states' awareness of the impending dangers and in getting the issues considered by the United Nations.

About the speaker

Noel Sharkey, BA PhD DSc FIET FBCS CITP FRIN FRSA, is Emeritus Professor of AI and Robotics and Professor of Public Engagement at the University of Sheffield (Department of Computer Science), and a journal editor. He has held a number of research and teaching positions in the UK (Essex, Exeter, Sheffield) and the USA (Yale, Stanford). Noel has moved freely across academic disciplines, lecturing in departments of engineering, philosophy, psychology, cognitive science, linguistics, artificial intelligence, computer science, robotics, ethics and law. He holds a Doctorate in Experimental Psychology (Exeter) and a Doctorate of Science (UU), and is a Chartered Electrical Engineer and a Chartered Information Technology Professional. Noel is a Fellow of the Institution of Engineering and Technology, the British Computer Society, the Royal Institute of Navigation and the Royal Society of Arts, and is a member of both the Experimental Psychology Society and Equity (the actors' union) for his work on popular robot TV shows.

Noel is well known for his early work on many aspects of neural computing, machine learning and genetic algorithms. As well as writing many academic articles, he also writes for national newspapers and magazines and has created thrilling robotics museum exhibitions and mechanical art installations. As holder of the EPSRC Senior Media Fellowship (2004-2010), he engaged with the public on science and engineering issues through more than 300 TV appearances and through radio and news interviews. Noel has been the architect of a number of large-scale robotics exhibitions for museums and has run robotics and AI contests for young people from 26 countries, including the Chinese Creative Robotics Contest and the Egyptian Schools AI and Robotics competition. As part of a team, he is a joint holder of the Royal Academy of Engineering Rooke Medal for the promotion of engineering.

Noel's core research interest is now the ethical application of robotics and AI in areas such as the military, child care, elder care, policing, autonomous transport, robot crime, medicine/surgery, border control, sex and civil surveillance. He was a consultant for the National Health Think Tank (Health2020) report Health, Humanity and Justice (September 2010), an advisor for the Human Rights Watch report Losing Humanity: the case against killer robots (November 2012), and a Working Party member for the Nuffield Foundation report Emerging biotechnologies: technology, choice and the public good (December 2012). He is also a director of the European branch of the think tank Centre for Policy on Emerging Technologies.

Nowadays Noel spends most of his time on the ethical, legal and technical aspects of military robots. He travels the world to talk to the military, NATO, policy makers, academics and other groups such as the International Committee of the Red Cross. He was a Leverhulme Research Fellow on the ethical and technical appraisal of robots on the battlefield (2010-2012) and is a co-founder and chairman-elect of the NGO International Committee for Robot Arms Control (ICRAC).

Recommended reading

Noel Sharkey (2014), Towards a principle for the human supervisory control of robot weapons, to appear in Politica & Società

Lethal Autonomous Robots

Anthony Finn, Director of the Defence And Systems Institute, University of South Australia

Lethal Autonomous Robots (LARs) differ from existing ‘fire-and-forget’ weapons. Although the latter attack targets without human involvement once their programming paradigms are satisfied, the estimate of military advantage versus collateral damage is still undertaken by humans, who are accountable in law. Decisions regarding the legitimacy of LARs thus hinge on whether they comply with International Humanitarian Law (IHL), which has been extensively codified in a series of international treaties. IHL essentially comprises a set of principles that together seek to temper the range and violence of war and to tie the actions of warfighters to morality more generally. If LARs are to comply with IHL, they cannot be indiscriminate. By definition, they must be constrained by paradigms that classify targets by signature or region. Ideally, they would balance the key principles of IHL (such as discrimination and proportionality) using sophisticated algorithms yet to be developed, although it remains an open question whether they could ever achieve this reliably, given that it challenges even humans.

About the speaker

Anthony Finn is a Research Professor of Autonomous Systems at the Defence And Systems Institute (DASI), University of South Australia. His primary research interests are in Autonomous and Unmanned Systems (and multi-vehicle or 'systems of autonomous systems' in particular). His research has informed governmental and international bodies and been cited as evidence in a number of criminal trials.

Working for war? Military robotics and the moral responsibilities of engineers

Robert Sparrow, Monash University

Note: the audio recording of the full talk is available on the wiki page.

A number of the scientists who were willing participants in the development of the atomic bomb when they believed it was necessary to defeat the Nazis had second thoughts when they realised the bomb was going to be used against Japan and, later, that nuclear weapons would be aimed at the Soviet Union. More recently, the role played by military funding in the sciences became controversial during the Vietnam War and the 1980s (with the development of the Star Wars project) because the goals and activities of the military were controversial at these times. I will argue that the ethics of working on military robotics today is, similarly, intimately connected to the nature of the recent wars in Iraq and Afghanistan, as these are the conflicts in which military robots have “come of age” and which are setting the agenda for the design of the next generation of robotic weapons. If it turns out that, by and large, robots are not defending our homelands against foreign invaders or “terrorists” but rather killing people overseas in unjust wars, then this raises serious questions about the ethics of building robots for the military in the current period. This presentation will therefore discuss the moral responsibilities of engineers when they accept military funding for their research.

About the speaker

Rob Sparrow is an Australian Research Council Future Fellow in the Philosophy Department and an Adjunct Associate Professor in the Centre for Human Bioethics at Monash University, where he researches ethical issues raised by new technologies. He has published widely on the ethics of military robotics, as well as on topics as diverse as human enhancement, artificial gametes, cloning and nanotechnology. He is a co-chair of the IEEE Technical Committee on Robot Ethics and one of the founding members of the International Committee for Robot Arms Control.

Recommended reading

Robotic weapons and the future of war

Thoughts from a robotics researcher

Timothy Bretl, University of Illinois at Urbana-Champaign

Note: the audio recording of the full talk is available on the wiki page.

Robotics research is not value-neutral. We choose what to work on and who we ask to pay for it. These choices have consequences, the devastation of which in military conflict has become quite clear. As members of academia, we are in a position of privilege that both demands and derives from a responsibility to expose, consider, and act upon these consequences. I will briefly discuss the extent to which this responsibility is being sustained.

About the speaker

Timothy Bretl is an Associate Professor in the Department of Aerospace Engineering, University of Illinois at Urbana-Champaign. His research interests are in the theoretical and algorithmic foundations of robotics and automation, motion planning, control and optimization.

U.S. Department of Defense policy on autonomy in weapon systems

Paul Scharre, Center for a New American Security

The prospect of increased autonomy in weapons systems raises challenging legal, moral, and policy issues. Of particular concern are the practical challenges associated with designing systems that behave in a predictable manner in complex, real-world environments and that, when they fail, fail-safe. U.S. Department of Defense Directive 3000.09, Autonomy in Weapon Systems, provides a useful framework for understanding these challenges and a model for best practices.

About the speaker

Paul Scharre is a Fellow and Project Director for the "20YY Warfare Initiative" at the Center for a New American Security.

From 2008 to 2013, Mr. Scharre worked in the Office of the Secretary of Defense (OSD), where he played a leading role in establishing policies on unmanned and autonomous systems and emerging weapons technologies. Mr. Scharre led the DoD working group that drafted DoD Directive 3000.09, establishing the Department’s policies on autonomy in weapon systems. Mr. Scharre also led DoD efforts to establish policies on intelligence, surveillance and reconnaissance (ISR) programs and directed energy technologies. Mr. Scharre was involved in the drafting of policy guidance in the 2012 Defense Strategic Guidance, the 2010 Quadrennial Defense Review and Secretary-level planning guidance. His most recent position was Special Assistant to the Under Secretary of Defense for Policy.

Prior to joining OSD, Mr. Scharre served as a special operations reconnaissance team leader in the Army’s 3rd Ranger Battalion and completed multiple tours to Iraq and Afghanistan. He is a graduate of the Army’s Airborne, Ranger, and Sniper Schools and Honor Graduate of the 75th Ranger Regiment’s Ranger Indoctrination Program.

Mr. Scharre has published articles in Proceedings, Armed Forces Journal, Joint Force Quarterly, Military Review and in academic technical journals. He has presented at National Defense University and other defense-related conferences on defense institution building, ISR, autonomous and unmanned systems, hybrid warfare and the Iraq war. Mr. Scharre holds an M.A. in Political Economy and Public Policy and a B.S. in Physics, cum laude, both from Washington University in St. Louis.

Recommended reading

U.S. Department of Defense Directive 3000.09 (2012), Autonomy in Weapon Systems

Why drones are different

Stephan Sonnenberg, Stanford Law School

In September 2012, the speaker co-authored a report documenting the inefficacy, unpopularity and presumed illegality of the US drone program as it was carried out in northwest Pakistan. The main findings of the report have since been confirmed by subsequent investigations and by official documents released to the public. In this talk, however, the speaker reflects on a question that was not addressed in the report, namely whether, and if so how, drones are different from other weapons designed to kill people during times of conflict. The question is controversial, in that it pits traditional laws governing war against emerging popular notions of ethics in confusing and sometimes contradictory ways. But it is also an important question to address, both to understand the emotional reaction that the US drone program continues to provoke at home and abroad, and to develop ethical policies to govern the use and further development of drone technology worldwide.

About the speaker

Stephan Sonnenberg is the Interim Clinical Director and Lecturer on Law with the International Human Rights and Conflict Resolution Clinic, which is part of the Mills Legal Clinic at Stanford Law School. He works with law students on applied human rights projects that involve both traditional human rights methods as well as dispute management strategies, for example conducting conflict analyses, facilitating consensus-building efforts, or designing reconciliation or other peace-building measures. Stephan also co-teaches an intensive human rights skills course, as well as an interdisciplinary course on human trafficking. Before joining Stanford Law School in 2011, Stephan worked as a Clinical Instructor with the Harvard Negotiation and Mediation Clinical Program, where he supervised a range of conflict management projects and taught a number of seminars and lecture classes focusing on negotiation, consensus building, and mediation.

Starting in 2011, Stephan and his colleague at the Stanford Law clinic began to critically evaluate the legality of the US drone program, first in Pakistan and subsequently in other countries where the US operates drones. In September of 2012, the Stanford Law clinic – in partnership with a human rights clinic at NYU – published a report titled “Living Under Drones: Death, Injury and Trauma to Civilians from US Drone Practices in Pakistan.” Since the publication of that report, Stephan has been asked numerous times, in a range of different forums, to defend, explain, or reflect on the content of that report and subsequent policy developments that have shifted the debate over drones since that time.

Stephan is a graduate of Harvard Law School and the Fletcher School of Law and Diplomacy. He also holds a degree in European Studies from the Institut d’Études Politiques in Paris, and an undergraduate degree from Brown University.

Recommended reading

Stanford International Human Rights and Conflict Resolution Clinic and Global Justice Clinic at NYU School of Law (2012), Living under drones: death, injury and trauma to civilians from U.S. drone practices in Pakistan